Following the rise of artificial intelligence in recent years, robotics is widely seen as the next global revolution. Combining the two technologies could mark a turning point in medicine, as demonstrated by the Spanish robot surgeon equipped with four arms capable of holding different tools and rotating them 360 degrees. The most advanced device of this kind in the world is the da Vinci surgical system, designed so that a surgeon can remotely perform very precise tasks such as dissecting, suctioning, cutting and sealing blood vessels.

These robots are not infallible, but they have immense potential in the sector despite their high price, which can reach 2 million dollars for the latest version. To date, more than 10 million surgical procedures have been performed with the 7,000 da Vinci systems around the world, and 55,000 surgeons have been trained to operate them. However, some want to take a further step towards automation: they want to train the robots to function independently and reduce doctors' workload.

This is what a team of researchers from Johns Hopkins University (JHU) in Maryland (USA) and Stanford University is proposing. They have shown how these devices can be trained on videos of other operations to perform “the same surgical procedures as skillfully as human doctors,” according to the university’s press release.

“It’s really magical to have this model and all we do is feed it data from the camera and it can predict the robotic movements needed for surgery,” said Axel Krieger, lead author of the research and associate professor in JHU’s mechanical engineering department. “We think this means an important step forward towards a new frontier in medical robotics.”

Krieger and his colleagues successfully demonstrated that the robots can learn by imitation, without requiring subsequent corrections. Over several experiments, they enabled da Vinci systems to learn complex surgical tasks efficiently and to adapt to unforeseen scenarios.

Learning by imitation

The company Intuitive Surgical first introduced these robots to the market in 2000 and has since released different versions and updates. Most have a central surgical console from which the surgeon obtains detailed images of the area to be operated on and controls the robot, which is equipped with various arms and tools.

In this case, the JHU and Stanford researchers used two arms ending in small pliers, commonly used to make sutures. Using a machine learning method, Krieger and his team successfully trained the system to autonomously perform three tasks common in surgical procedures in a simulated abdomen (with pork and chicken samples): handling a needle, lifting body tissue and suturing.

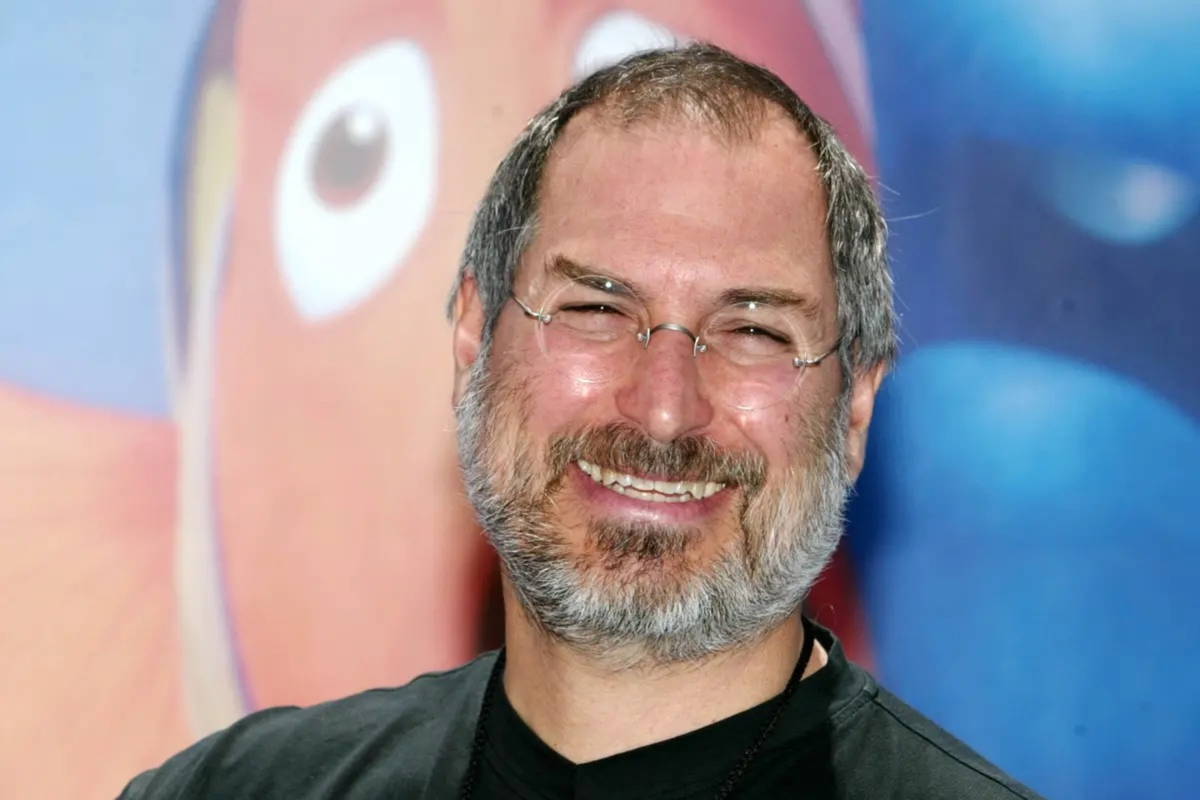

Johns Hopkins University robot surgeon demonstration

Until now, this type of “programming” of the autonomous functions of surgical robots relied on training each action individually, which could take a prohibitive amount of time and give fairly imprecise results. “Someone could spend a decade trying to model suturing. And that’s only for one type of surgery,” Krieger says.

To solve the problem and reduce medical errors, the engineers combined imitation learning with the same machine learning architecture on which AIs like ChatGPT are based. Where OpenAI’s generative artificial intelligence works with words and text, the Johns Hopkins engineers’ model works with kinematics, breaking down into mathematical formulas the angles at which the robots can move their arms and tools.
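
To make the idea of describing motion through angles more concrete, here is a minimal sketch. It is not the researchers' model: it assumes a hypothetical flat arm with two links, and the function name and link lengths are invented for illustration. It only shows how joint angles can be turned into a tool-tip position with basic trigonometry.

```python
import math

def tip_position(theta1, theta2, l1=1.0, l2=1.0):
    """Forward kinematics for a hypothetical planar two-link arm.

    theta1: angle of the first link from the x-axis, in radians.
    theta2: angle of the second link relative to the first, in radians.
    l1, l2: assumed link lengths (arbitrary units).
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

if __name__ == "__main__":
    # First link pointing straight up, second link bent back to horizontal.
    x, y = tip_position(math.pi / 2, -math.pi / 2)
    print(f"tip at ({x:.3f}, {y:.3f})")
```

A real surgical arm has many more joints and moves in three dimensions, but the principle of expressing tool motion as functions of angles is the same.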

Wrist cameras

For the da Vinci system to perform the tasks perfectly on its own, with dexterity and ease similar to those of human doctors, the AI was fed nearly 1,000 videos of the different tasks. This material, usually used for postoperative analysis and recorded with tiny wrist cameras mounted on the robotic arms, now constitutes a valuable database to feed the model.

“All we need is a picture and this artificial intelligence system finds the right action,” explains another of the lead authors of the study, Ji Woong ‘Brian’ Kim. “We found that even with a few hundred demonstrations, the model is able to learn the procedure and generalize to new environments that it has not encountered.”
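
The mapping Kim describes, from an observation to an action, can be illustrated with a deliberately simple sketch. This is not the study's model: it swaps the ChatGPT-style architecture for a nearest-neighbour lookup over recorded demonstrations, and the observation features, motions and function name are all hypothetical.

```python
import math

# Hypothetical demonstrations: (observation features, recorded arm motion).
# Real inputs would be camera images; two numbers stand in for them here.
DEMOS = [
    ((0.0, 0.0), (0.0, -1.0)),  # e.g. lower the tool
    ((1.0, 0.0), (0.0, 1.0)),   # e.g. lift
    ((0.0, 1.0), (1.0, 0.0)),   # e.g. move sideways
]

def imitate(observation, demos=DEMOS):
    """Return the motion recorded in the demonstration nearest to the observation."""
    _, motion = min(demos, key=lambda demo: math.dist(demo[0], observation))
    return motion

if __name__ == "__main__":
    # An unseen observation is answered with the closest demonstrated motion.
    print(imitate((0.9, 0.1)))
```

A lookup like this only copies the closest example. The appeal of a learned model, as described in the article, is that it can generalize to situations that differ from every demonstration.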

Indeed, throughout the experiments the system proved capable of correcting its own errors on the fly. “For example, if it drops the needle, it automatically picks it up and continues. That’s not something I taught it to do,” Krieger says.

The findings, presented this week at the prestigious Conference on Robot Learning in Munich, suggest that imitation learning will make it possible to train these surgical robots in a few days (instead of months or years) so that they can perform complex surgical procedures without human intervention in the future.