One of Apple’s obsessions is the security of its users. We all know how much the company cares about its customers’ privacy, something Apple treats as sacred, even if that means standing up to the US government, or even the CIA. “My users’ data is not to be touched” could well be its motto.

Now it has turned its attention to the safety of its underage users. It will play “Big Brother” and monitor images passing through its servers, both photos sent in Messages and photos stored in iCloud, looking for child sexual abuse material. Well done.

This week, Cupertino announced a series of measures to protect underage users of the iPhone, iPad and Mac. They include new communication safety features in Messages, improved detection of child sexual abuse material (CSAM) in iCloud, and expanded guidance in Siri and Search.

In other words, Apple will examine photos sent or received in Messages by children’s accounts, as well as photos stored in iCloud, to detect images suspected of being child pornography. Once a suspicious image is automatically detected, it will be flagged so that a person can review it. There will also be new controls in Search and Siri.

Photos attached to messages

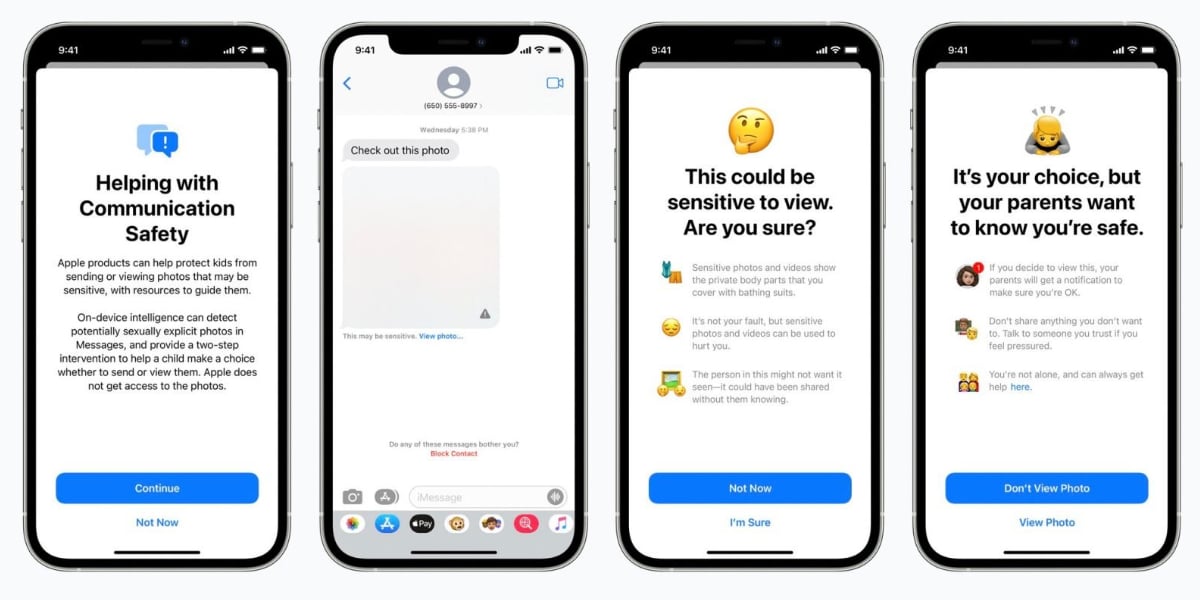

Apple explains that when a minor who belongs to an iCloud Family receives, or tries to send, a sexually explicit photo in a message, the child will see a warning. The image will be blurred and the Messages app will display a warning that it “may be sensitive”. If the child taps “Show Photo”, a pop-up message will explain why the image is considered sensitive.

If the child still chooses to view the photo, the parents in their iCloud Family will receive a notification “to make sure the child is OK”. The pop-up will also include a quick link to further help.

If the child tries to send a sexually explicit image, they will see a similar warning. Apple specifies that the child will be warned before the photo is sent and that parents can receive a message if the child decides to send it anyway. These parental notifications apply to Apple ID accounts belonging to children under 13.

Photos in iCloud

This is how Apple will treat photos of a child under 13.

Apple wants to detect CSAM (Child Sexual Abuse Material) images stored in iCloud Photos. The company will then be able to report these cases to the National Center for Missing and Exploited Children (NCMEC), a US organization that acts as a comprehensive reporting center for CSAM and works closely with law enforcement.

If the system finds a possible CSAM image, it flags it so that a real person can verify it.

Images that are saved only on the device and never pass through iCloud servers obviously cannot be checked by Apple. The whole child protection system will launch first in the United States with iOS 15, iPadOS 15 and macOS Monterey, and will later be extended to other countries.

Searches and Siri

Siri and Search will also respond to any searches a user makes on CSAM-related topics. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources on where and how to file a report, which could make an eventual prosecution easier.

Figures such as John Clark, President and CEO of the National Center for Missing and Exploited Children; Stephen Balkam, founder and CEO of the Family Online Safety Institute; former Attorney General Eric Holder; and former Deputy Attorney General George Terwilliger have expressed their full support for Apple’s initiative.