It seems that Apple has decided to halt its CSAM plan, under which it was considering scanning its users' photographs for child pornography. There is no longer any trace of the controversial plan on Apple’s website.

We don’t know at this time whether this is a temporary withdrawal while Apple looks for the best way to implement the system, or whether it is shutting down the project altogether. The point is that the CSAM plan has disappeared from the Child Safety page of the official site.

For a few months now, we have been talking about the controversial project that Apple had in mind to increase the security of the digital content on its devices: Apple's CSAM plan.

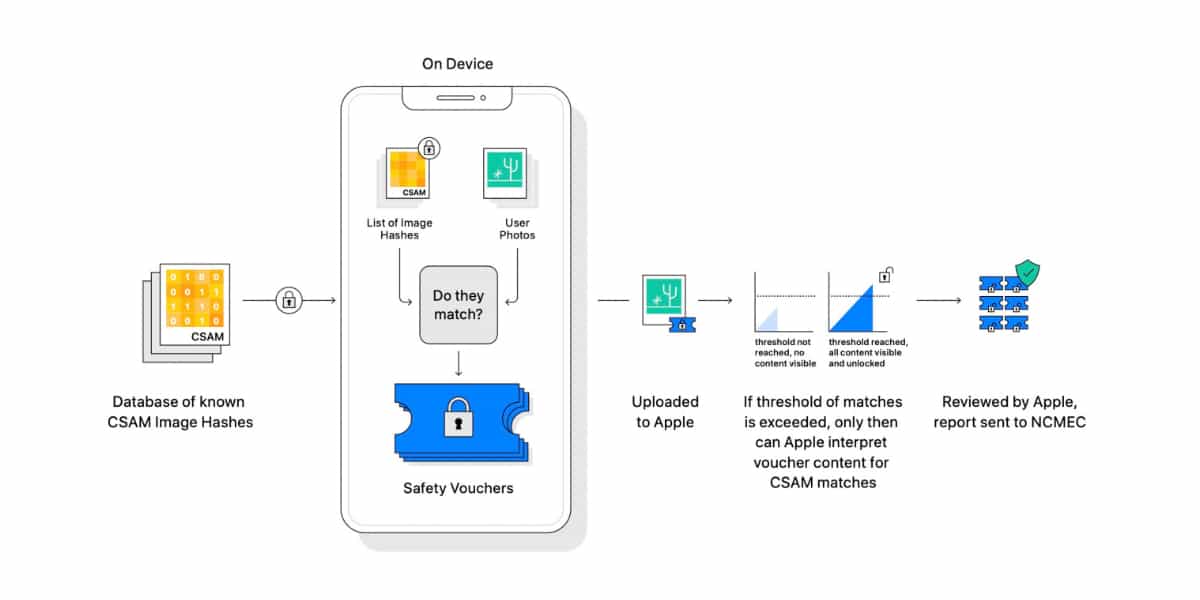

The plan was to use a two-stage system called neuralMatch, first automatic and then manual, to find suspected child abuse images in the private photo libraries of users who keep them in iCloud.

The system was intended to automatically scan every photo that a user saves to their iCloud account. If the server software detected a possibly “suspicious” photo containing child pornography, it would alert a team of human reviewers.

If a reviewer saw possible child abuse in the image, Apple would report it to the relevant local authorities. The company’s intentions were obviously good, but people's privacy was at stake.

Shortly after Apple announced its CSAM plan, it faced a deluge of criticism from privacy groups and rights organizations defending the right to privacy.

Project stopped, not rejected

Apple has now erased from the Child Safety page of its official website any mention of the CSAM project, whose launch had been planned for a future version of iOS 15. According to a report just published by The Verge, Apple has not abandoned the CSAM project, but wants to see how it can modify it so that it is accepted by its opponents. It has a difficult task ahead.