With the new Galaxy S24, Samsung has opened a new era in which AI takes a central place in the smartphone, and Google has joined in by launching two new features that make search more natural. One of them will completely change the way we interact with a mobile phone's screen: a circular gesture to search in any application.

This gesture had already leaked a few weeks ago as one of the most notable surprises of the Galaxy S24, especially because of how intuitive it is to circle whatever you want to search for on Google. It is a new action you will have to get used to, and it works in any application installed on your phone, whether you are looking at a YouTube or TikTok video or an image from any social network.

Google announced two new search features that aim to make searching more natural and intuitive, and to satisfy that very human need known as curiosity. The first is a gesture called “Circle to search”; the second is the ability to take a photo with Google Lens and use it to ask the search engine any question.

Circle to search

The first novelty is a new gesture that can be performed in any application installed on the phone. In other words, you can draw a circle with your finger around any image or video shown on the screen to search for it. First, you must long-press the home button or navigation bar to activate the ‘Circle to search’ gesture.

This means that if you’re watching a video on YouTube and suddenly want to identify the breed of one of the dogs that appears, or the brand of the streamer’s shirt, you can run an immediate search with a simple circular gesture. Anything that appears on the screen can be searched, including in chat apps such as Google Chat or Google Messages; text can also be used for searches, as Google demonstrated in its announcement.

The new “Circle to search” gesture will be available globally on the Pixel 8, the Pixel 8 Pro and the Samsung Galaxy S24 series. Google has also confirmed that it will soon reach other devices, although it has not given an approximate date, so for now it remains like those exclusives that Google usually launches on its Pixels and that eventually make their way to the rest of Android.

Generative AI for multisearch

Generative AI combined with multisearch is the other big new feature, and it is available from today in the United States for users searching in English. Google says it will reach other regions and languages soon, but has not given a date.

Multisearch and generative AI let you use an image as the basis for a question. For example, you can take a photo of a plant with the phone's camera through Google Lens and, by tapping the icon on the screen, ask: “When should I water this plant?”

This means that the Google search engine, thanks to generative AI, will “understand” what is in the image and be able to handle all kinds of queries about it. In its announcement, Google gave several examples to show how much complexity sits behind a tool that is very easy to use, and one that will open up new horizons for users in their search experience.

Another example is a photograph of a geode, followed by a question to the generative AI about why it has that purple color. The search engine returns a precise answer, making it clear that this new feature will completely change the way we put questions to the search engine.

Gemini on the Galaxy S24

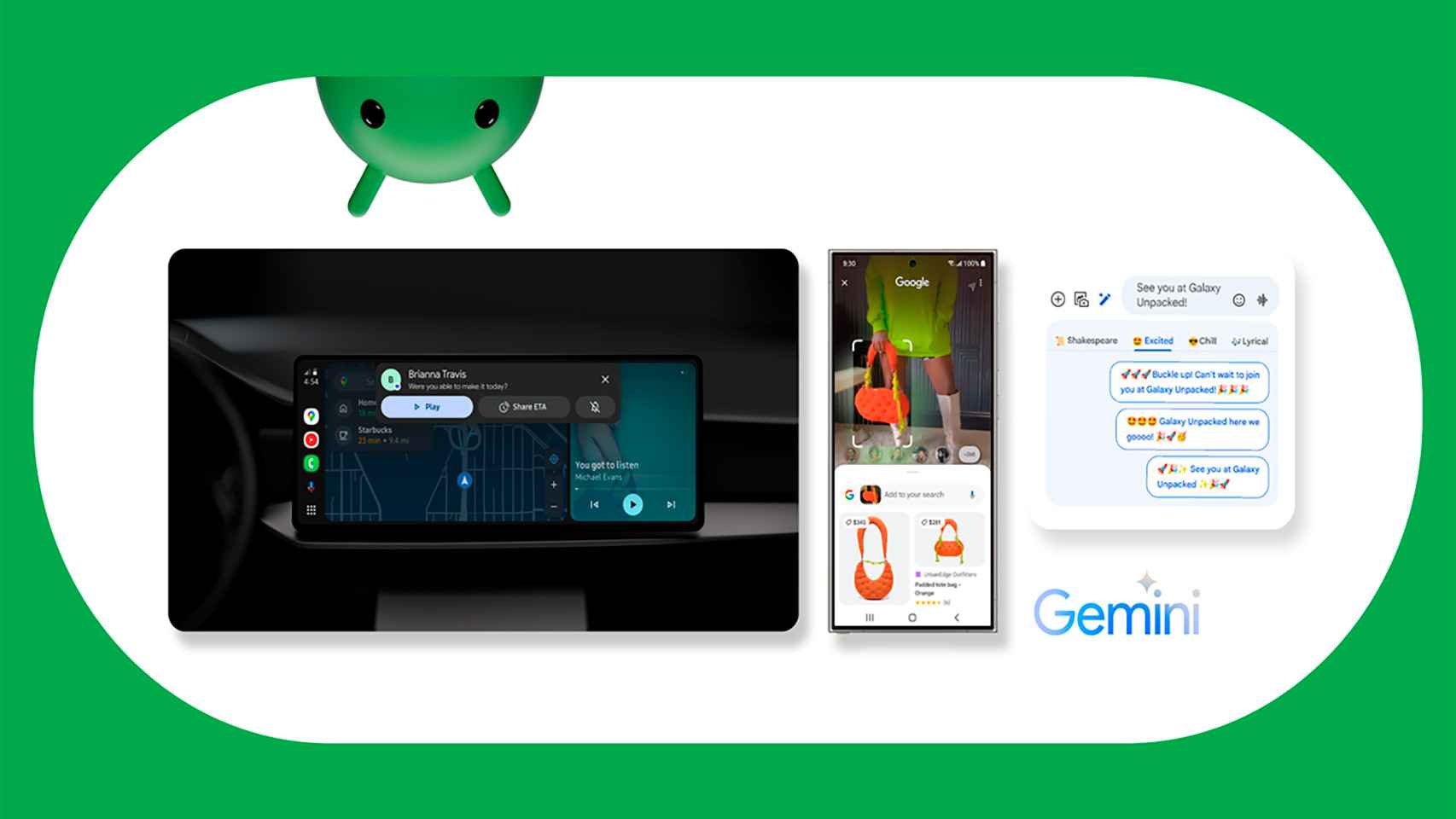

Late last year, Google announced Gemini, its most powerful AI model. It is available on the new Samsung Galaxy S24, announced today, where it powers new experiences in Samsung's applications and services.

The Notes, Voice Recorder and Samsung Keyboard apps on the Galaxy S24 series gain new functionality through Gemini Pro, Google’s best model for a wide variety of tasks. Here are some of its capabilities, to better understand what the inclusion of Gemini in the Galaxy S24 means:

- The voice recorder can record a lecture for Gemini AI to provide a summary of the most important points.

- Imagen 2, Google’s text-to-image technology, powers generative editing in the Gallery app.

- Gemini Nano, the most efficient model for running on the device, lets you use Magic Compose in the Google Messages app to compose messages in different tones.

- Photomoji lets you create new emojis from your own photos with generative AI.

AI in Android Auto

Another application is also getting generative artificial intelligence to make driving easier: Android Auto. Google explains that AI will now be able to automatically summarize long texts or busy group chats while the user keeps their hands on the wheel.

The goal is for the driver’s attention to stay on the road and for Android Auto to create as few distractions as possible. The application will also be able to suggest replies and actions suited to the context. Google gives an example to show how it works:

- A friend sends a link via a chat app to go eat together at a restaurant.

- The driver will only have to tap once to activate the route to the location, send the estimated time of arrival and even make a call.

There is another new feature for Android Auto on Samsung phones: the interface design will adapt to match the phone's icons and wallpaper. It is a curious detail that adds value to the close collaboration between Google and Samsung, which aims to give the new Galaxy S24 more unified experiences, as is the case with Android Auto.
