Google announced the Pixel 4 phones in October last year, placing the emphasis on a technology it had spent years developing: Soli. By combining a radar with a series of other sensors, Google gave the phones a system that can detect and interpret gestures made around the device and act accordingly.

In our review of the Google Pixel 4 XL we noted that the Motion Sense system, based on Soli technology, still has a long way to go, especially in terms of performance. Even so, it quickly became one of the favorite features of many Pixel 4 owners, not least because the same technology is responsible for part of the solid face unlock system built into the Pixel 4 series.

Now, several months after launch and with many people already waiting for the new Pixel 4a, Google has explained in more detail how Soli's technology works.

Google explains how the Pixel 4's Motion Sense system works

In the post, Google explains how the Pixel 4 can tell when a user reaches for the phone to pick it up, so it can wake the screen automatically, or when you swipe your hand from one side of the screen to the other to skip a song.

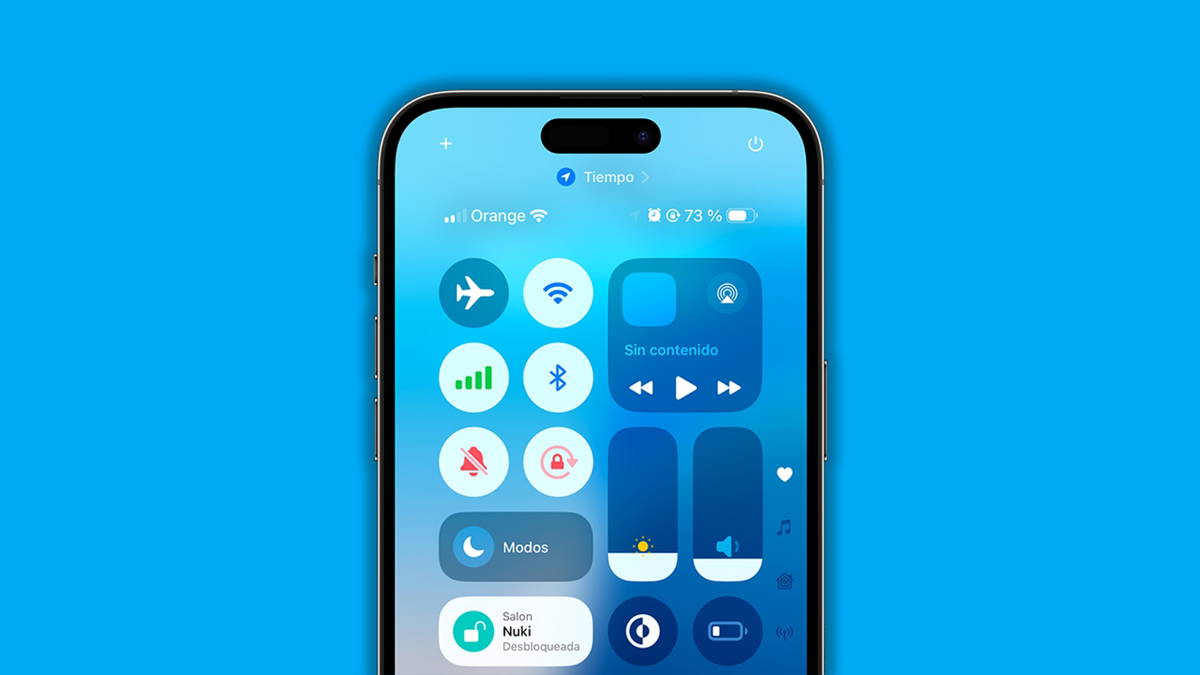

In a helpful illustration, Google shows the image the Soli radar produces when a movement is made. As expected, the image has very low resolution, which on the other hand helps ensure the system does not put users' privacy at risk. Google explains that it does not need to capture much detail to detect a movement and translate it into patterns that its machine learning models can interpret.

Google explains that the strength of the signal Soli receives varies with the distance between the radar and the subject causing the movement. In the images, signal intensity is shown as brighter or darker areas. Of the three images shared, the one on the left shows what the radar "sees" when a person walks toward the phone, the middle one shows an arm reaching toward the phone, and the one on the right shows the side-to-side swipe used to change songs.
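To give a rough idea of how a low-resolution image where "brighter means stronger signal" can be built from radar data, here is a minimal Python/NumPy sketch. It assumes a generic frequency-modulated continuous-wave (FMCW) radar and a single simulated moving point; it is not Soli's actual processing pipeline, which Google's post does not detail. The helpers `simulate_frame` and `range_doppler_map`, along with all parameter values, are hypothetical. A 2D FFT over a frame of chirps turns the moving point into one bright cell whose column encodes distance and whose row encodes velocity.

```python
import numpy as np


def simulate_frame(num_chirps: int, samples: int, range_bin: int, doppler_bin: int,
                   amplitude: float = 1.0, noise: float = 0.05, seed: int = 0) -> np.ndarray:
    """Hypothetical frame of beat-signal samples for one moving point target.

    Rows are chirps (slow time); columns are samples within a chirp (fast time).
    """
    rng = np.random.default_rng(seed)
    m = np.arange(num_chirps)[:, None]  # chirp index
    n = np.arange(samples)[None, :]     # sample index within a chirp
    # Beat frequency along fast time encodes distance; the phase rotation
    # from one chirp to the next encodes radial velocity.
    phase = 2 * np.pi * (range_bin * n / samples + doppler_bin * m / num_chirps)
    target = amplitude * np.exp(1j * phase)
    shape = (num_chirps, samples)
    return target + noise * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))


def range_doppler_map(frame: np.ndarray) -> np.ndarray:
    """Magnitude image: columns are range bins, rows are velocity bins.

    Brighter cells mean a stronger received signal at that distance and velocity.
    """
    range_fft = np.fft.fft(frame, axis=1)                # FFT over fast time -> range
    doppler_fft = np.fft.fft(range_fft, axis=0)          # FFT across chirps -> velocity
    return np.abs(np.fft.fftshift(doppler_fft, axes=0))  # put zero velocity in the middle row


frame = simulate_frame(num_chirps=16, samples=32, range_bin=5, doppler_bin=3)
image = range_doppler_map(frame)
row, col = np.unravel_index(np.argmax(image), image.shape)
# Velocity bin 3 lands at row 3 + 16 // 2 = 11 after fftshift; range bin 5 stays in column 5.
print(row, col)  # 11 5
```

Using one FFT axis for distance and the other for velocity is common in FMCW processing. A real system would add calibration, clutter filtering and the learned models Google describes, none of which is modeled here.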

Another interesting aspect of this system is the process Google used to train it. The team built an artificial intelligence model with TensorFlow and trained it on millions of gestures recorded by thousands of participants. Thanks to this machine learning process, Soli can also filter out interference generated by the device itself, such as the vibration or movement produced by the phone's speaker while it plays music.

However useful this technology may or may not be today, it is remarkable that Google has managed, after years of development, to miniaturize a technology like Soli and bring it to life as an impressive gesture control system.


About Christian Collado

Growth Editor at Andro4all, specializing in SEO. I am studying software development, and since 2016 I have been writing about technology, especially the Android world and everything related to Google. You can follow me on Twitter, email me if you have something to tell me, or contact me via my LinkedIn profile.
