Apple doesn’t really like to use the terms “Artificial Intelligence” or “AI”, preferring the more general term “Machine Learning”. But call it ML, AI or whatever you want, it runs through every Apple product, especially the iPhone. It’s used in the Photos app, the camera, the keyboard, Siri, the Health app and much more.

But much of it works in ways that are all but invisible. We don’t think about facial recognition when Photos groups pictures of people for us. We have no idea how much AI comes into play every time we press the shutter button and get a great shot from such a tiny camera sensor and lens system.

If you want a truly obvious example of AI on your iPhone, look no further than the Magnifier app, specifically its detection mode. Point your iPhone at things and you’ll get a description of each object and roughly where it is, all produced by AI running entirely on the device. Here’s how to try it.

Enabling detection mode in Magnifier

First, open the Magnifier app. You may have added it to Control Center, or to the Action Button on an iPhone 15 Pro, but you can also find it in the App Library or through search.

The app is designed to help people see close-up details and read fine print, and to serve as an aid for people with visual impairments. I use it all the time to read the tiny ingredient lists on food packages.

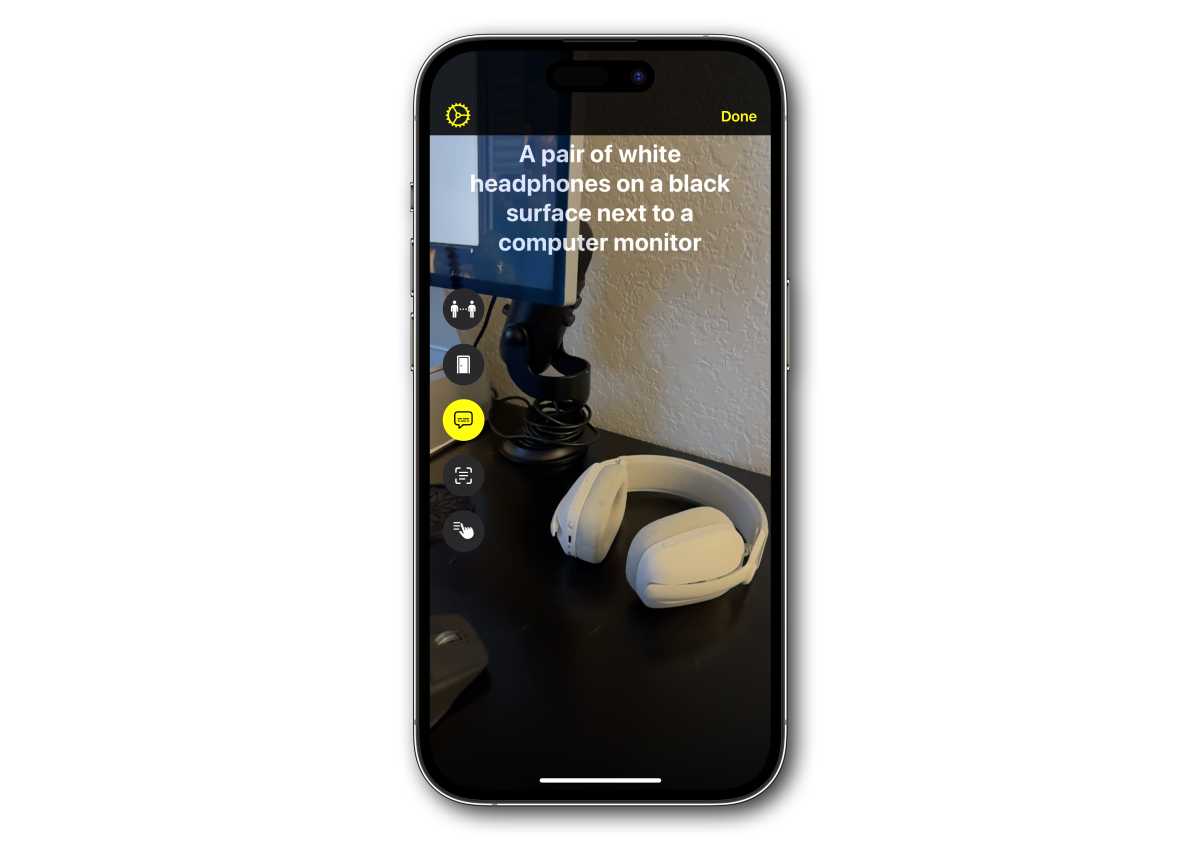

From there, tap the bracketed box on the far right (on iPhones with LiDAR), then tap the speech bubble button on the left. If your iPhone doesn’t have LiDAR, you’ll just see the speech bubble button. Don’t see the buttons? Drag the zoom slider up to reveal them.

Foundry

You’re now in detection mode. The Settings gear lets you choose whether to see text, hear spoken descriptions, or both, for whatever you point at.

Watch AI at work

Now just start pointing your iPhone at things! You’ll see a text overlay describing whatever is in view: animals, objects, plants and so on. It can take a split second to update, and it’s sometimes a bit wrong (and sometimes hilariously so), but the scene analysis on display is impressive. You’ll get descriptions not only of colors, but also of positions and activities.

Look at this:


It recognized not only that it was a cat, but that it was a sleeping cat, and not just in a bed but in a pet bed.

Notice how in the following example it was able to describe the headphones (white), their position (on a black surface), and their relationship to another detected object (next to a computer screen).


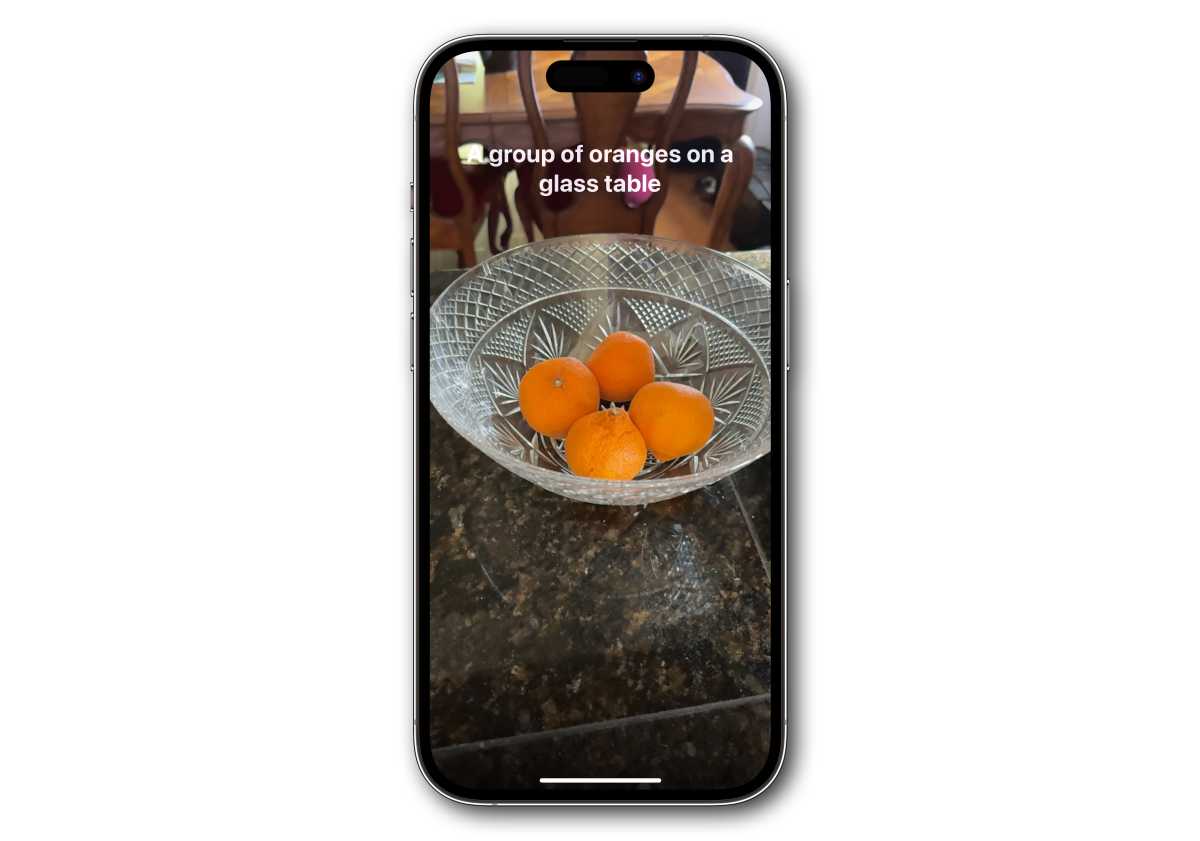

Of course, things go wrong sometimes. It’s not a glass table, it’s a glass bowl. Perhaps the chairs in the background confused it, or it couldn’t make out the bowl’s shape because of all its facets and angles.


But indoors or out, I’m constantly amazed at how well this feature describes what it’s looking at. And here’s the kicker: it all runs entirely on the device. Seriously, put your iPhone in airplane mode and it works exactly the same. No video is streamed to the cloud, so no one else knows where you are or what you’re doing.

Admittedly, the accuracy of this object detection and description isn’t especially impressive next to AI routines running on giant cloud servers, which can take longer and throw massive processing power and huge object-recognition models at the problem. Big Tech’s cloud models have been able to beat this for years.

But this is just a relatively minor feature that runs smoothly on any modern iPhone, even models that are several years old. It’s a great example of what Apple can already do with AI, and of what our iPhones could do in the future with better code and more training, all while respecting our privacy and security.