Apple has previewed some of the features it will release with iOS 17, and one of them has made a big impact: Personal Voice. It is an accessibility option that can reproduce your voice using artificial intelligence so your iPhone, iPad or Mac can speak for you. What can it do? How does it work? We explain everything below.

What is Personal Voice?

Personal Voice is a new feature that Apple will launch with iOS 17 and that will be found in the Accessibility menu of our devices (iPhone, iPad and Mac). Thanks to artificial intelligence, you can create a synthetic voice similar to your own using only your iPhone, iPad or Mac, with no other devices and in just 15 minutes. You are not limited to your own voice: you can also do it with the voices of other people, for example deceased relatives.

Live Speech

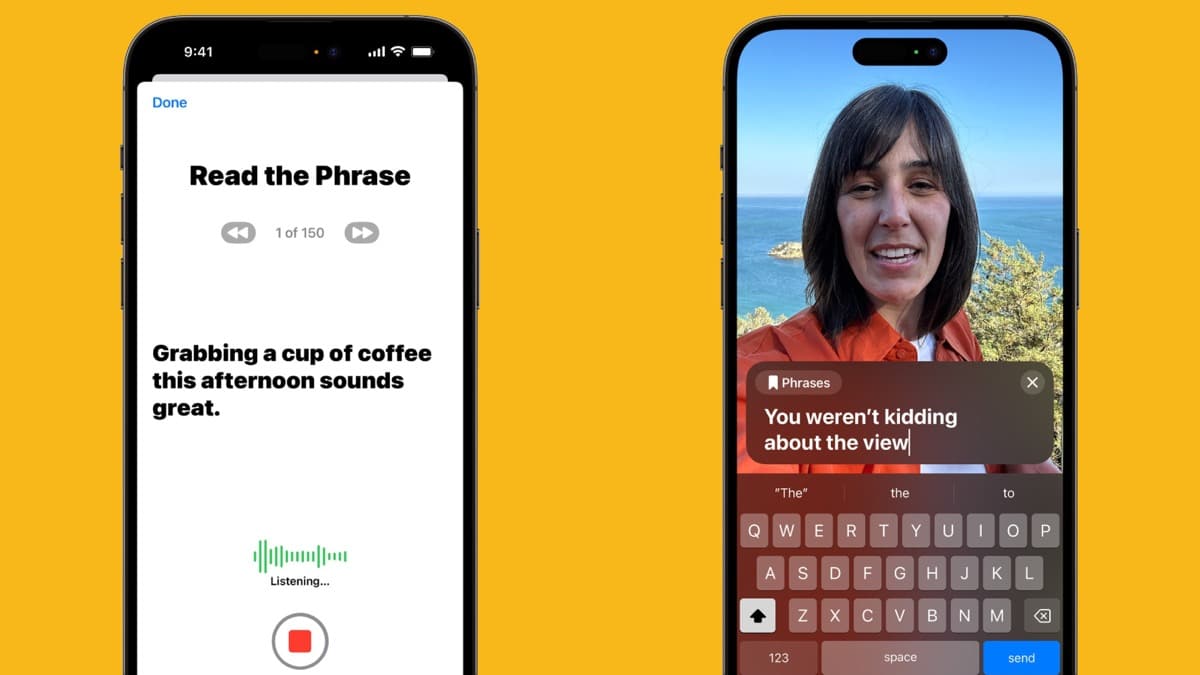

Alongside Personal Voice we find another very interesting option that Apple has named Live Speech, which uses the voice you have already created with Personal Voice to read aloud the text you write during a phone or FaceTime call. That is to say, you can make phone calls or video calls even if you can't speak, because you can write what you want to say and the other person will hear it as if it were your own voice, mimicking your accent and tone. You can even save frequently used phrases, such as greetings or goodbyes, and "say" them simply by touching the screen, without having to write them again.

Requirements

To use Personal Voice, you must meet the following minimum requirements:

- An iPhone or iPad with iOS/iPadOS 17 or later

- A Mac with an Apple silicon processor and macOS 14 or later

For now, Personal Voice will be available only in English, but it will be extended to other languages shortly.

Who is Personal Voice for?

Because it lives in your device's Accessibility menu, it is not intended for all users, although anyone who wants to can use it. According to Apple, this new feature is for the millions of people around the world who cannot use their voice or who will lose it over time, for example patients with ALS (amyotrophic lateral sclerosis) or any other condition that progressively takes away the ability to speak. Remember that the user must first have recorded their voice for the system to work, so it won't help those who have already lost their voice, only those who are at risk of losing it over time and can plan ahead.

Privacy

One of the questions many of you are surely asking is what privacy guarantees Apple offers with this feature. As always, Apple assures us that the entire procedure is carried out on our device.

How is it configured?

You will have to read aloud randomly chosen sentences that appear on your device's screen. The procedure requires about 15 minutes of recording for the created voice to come close enough to your own, but you don't have to do it in one sitting. If you have to stop partway through the training for any reason, you can pick it up later where you left off. Once the recording is complete, you are not quite finished: all the data must then be analyzed on the device itself, which may require leaving it charging overnight.

You will not have to perform this procedure on every one of your devices, but for that you need to explicitly enable syncing via iCloud. If you would rather not sync, you must complete the training on each device separately.
