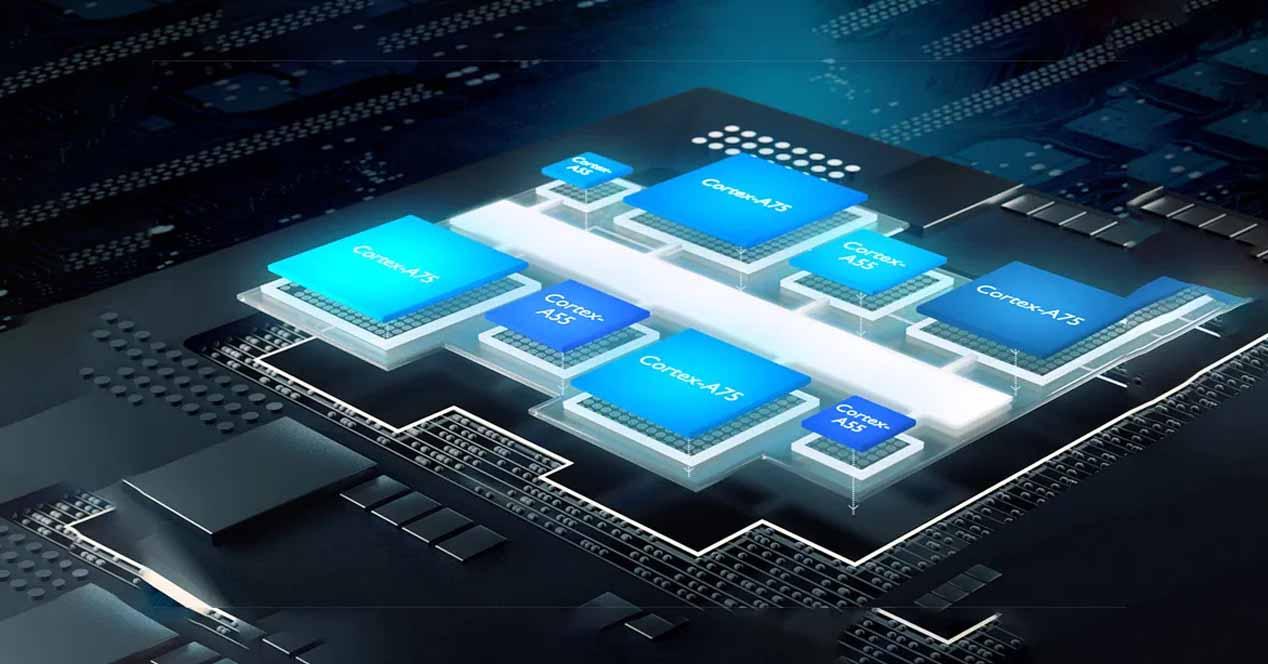

The concept behind this architecture is very simple and, at the same time, efficient: the chip combines two types of cores. Some are small, always active, and handle light tasks such as browsing the Internet or writing in Word. The others are larger, more powerful cores that usually sit idle, waiting for a task that demands more performance; when one arrives, they wake up and take over the work. This big.LITTLE architecture is used in CPUs, where the number of cores is quite low, especially compared to a GPU, where cores are counted in the thousands.
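To make the idea concrete, here is a minimal sketch of a big.LITTLE-style dispatch policy. It is a simplification under stated assumptions: each task comes with an estimated load, `HEAVY_THRESHOLD` is a made-up cut-off, and tasks are placed individually on one cluster or the other. Real schedulers (and real big.LITTLE modes such as whole-cluster switching) use far richer heuristics.

```python
from dataclasses import dataclass, field

# Hypothetical cut-off: estimated load (0.0-1.0) above which a task counts as "heavy".
HEAVY_THRESHOLD = 0.8


@dataclass
class Cluster:
    name: str
    awake: bool
    tasks: list = field(default_factory=list)


def dispatch(task_name: str, load: float, little: Cluster, big: Cluster) -> str:
    """Place a task on the little or big cluster based on its estimated load."""
    if load <= HEAVY_THRESHOLD:
        # Light work stays on the always-on little cores.
        little.tasks.append(task_name)
        return little.name
    if not big.awake:
        # Wake the big cores only when a demanding task actually shows up.
        big.awake = True
    big.tasks.append(task_name)
    return big.name


def idle_big(big: Cluster) -> None:
    """Put the big cluster back to sleep once it has no work left."""
    if not big.tasks:
        big.awake = False
```

For example, dispatching a browser tab with load 0.2 leaves the big cluster asleep, while a video export with load 0.95 wakes it up; once its task list empties, `idle_big` switches it off again.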

NVIDIA Optimus was already a sort of big.LITTLE for GPUs

Many of you will remember the days when almost every laptop with a dedicated GPU had NVIDIA Optimus technology. With it, when we were doing office work or any other task that did not involve 3D, the graphics integrated into the processor were used to save power, but when we ran a game or a 3D application, the dedicated graphics card took over so that all of its power was available.

This clever way of taking advantage of the fact that the notebook had two GPUs is, in essence, a crude form of big.LITTLE (switching between two whole chips rather than being built into a single architecture), but the idea is basically the same: when the “big” GPU is not needed, it is switched off or kept on standby and the iGPU does all the work for as long as it can, saving energy and reducing the heat generated. When more performance is needed, the “big” GPU kicks in and delivers it.
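The Optimus-style decision can be sketched in a few lines. This is only an illustration of the policy described above, not NVIDIA's driver logic: the `PROFILES` table, the application names, and the `uses_3d` flag are hypothetical stand-ins for however the real driver decides which programs need the dedicated GPU.

```python
from enum import Enum


class Gpu(Enum):
    INTEGRATED = "iGPU"
    DISCRETE = "dGPU"


# Hypothetical per-application profiles; the names are illustrative only.
PROFILES = {
    "game.exe": Gpu.DISCRETE,
    "modeler.exe": Gpu.DISCRETE,
}


def select_gpu(app: str, uses_3d: bool) -> Gpu:
    """Pick a GPU: a known profile wins, otherwise 3D work goes to the discrete GPU."""
    if app in PROFILES:
        return PROFILES[app]
    return Gpu.DISCRETE if uses_3d else Gpu.INTEGRATED


def discrete_can_sleep(running: dict) -> bool:
    """The discrete GPU can be powered down if no running app is assigned to it."""
    return all(gpu is not Gpu.DISCRETE for gpu in running.values())
```

With only a word processor open, everything runs on the iGPU and `discrete_can_sleep` returns `True`; as soon as a game is assigned to the dedicated GPU, it has to stay awake.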

So, could big.LITTLE be implemented inside a GPU?

The idea is certainly good, but either manufacturers integrate two GPUs on the same PCB to get the functionality described above, or things get considerably more complicated: working with the 4 to 16 cores a processor may have is not the same as working with the thousands of cores in a GPU. To put this in context, a Radeon RX 6800 XT has 4,608 shader processors (stream processors), while an RTX 3090 has a whopping 10,496 CUDA cores.

A full implementation of this architecture would require redefining how the GPU operates, because GPUs already have two types of ALU today. One executes simple instructions at very low power, while the other (the SFUs, or special function units) performs more complex operations such as square roots, logarithms, powers, and trigonometric functions. SFUs are not “big” cores in the big.LITTLE sense, but they are already named differently (SFU versus FP32 ALU) precisely because they do different jobs.
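The split between the two kinds of units can be pictured as a routing table from instruction type to execution unit. The sketch below is hypothetical: the opcode names are generic placeholders rather than any real GPU instruction set, and powers are assumed to be built from `log2` and `exp2`, a common approach on GPUs, instead of being a single special-function instruction.

```python
# Hypothetical opcode names; real GPU ISAs use their own mnemonics.
SIMPLE_OPS = {"add", "mul", "fma", "min", "max"}
SPECIAL_OPS = {"sqrt", "rsqrt", "rcp", "log2", "exp2", "sin", "cos"}


def unit_for(op: str) -> str:
    """Return which kind of execution unit would handle the given opcode."""
    if op in SIMPLE_OPS:
        return "FP32 ALU"
    if op in SPECIAL_OPS:
        return "SFU"
    raise ValueError(f"unknown opcode: {op}")


def count_by_unit(program: list) -> dict:
    """Count how many instructions of a program go to each unit type."""
    counts = {"FP32 ALU": 0, "SFU": 0}
    for op in program:
        counts[unit_for(op)] += 1
    return counts
```

A typical shader body is dominated by simple arithmetic, which is why the cheap FP32 ALUs vastly outnumber the SFUs; a sequence such as `["mul", "add", "sqrt", "fma", "log2"]` sends three instructions to the ALUs and two to the SFUs.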

So you see, both in the past with Optimus and today, something similar to big.LITTLE is already implemented in GPUs; it is simply different, works differently, and is not called that. However, since everything lately seems to be geared towards greater power efficiency, this is something we cannot rule out, and NVIDIA and AMD certainly have the capability to make it happen. It would be an ideal way to save energy and produce less heat, right?