A modern computer needs, among other things, a processor and a graphics card to function. At the opening of this year's Computex 2023 in Taipei, NVIDIA's CEO came out swinging. More specifically, he took a big swipe at Intel, saying that GPUs remarkably reduce the cost and energy consumption of artificial intelligence.

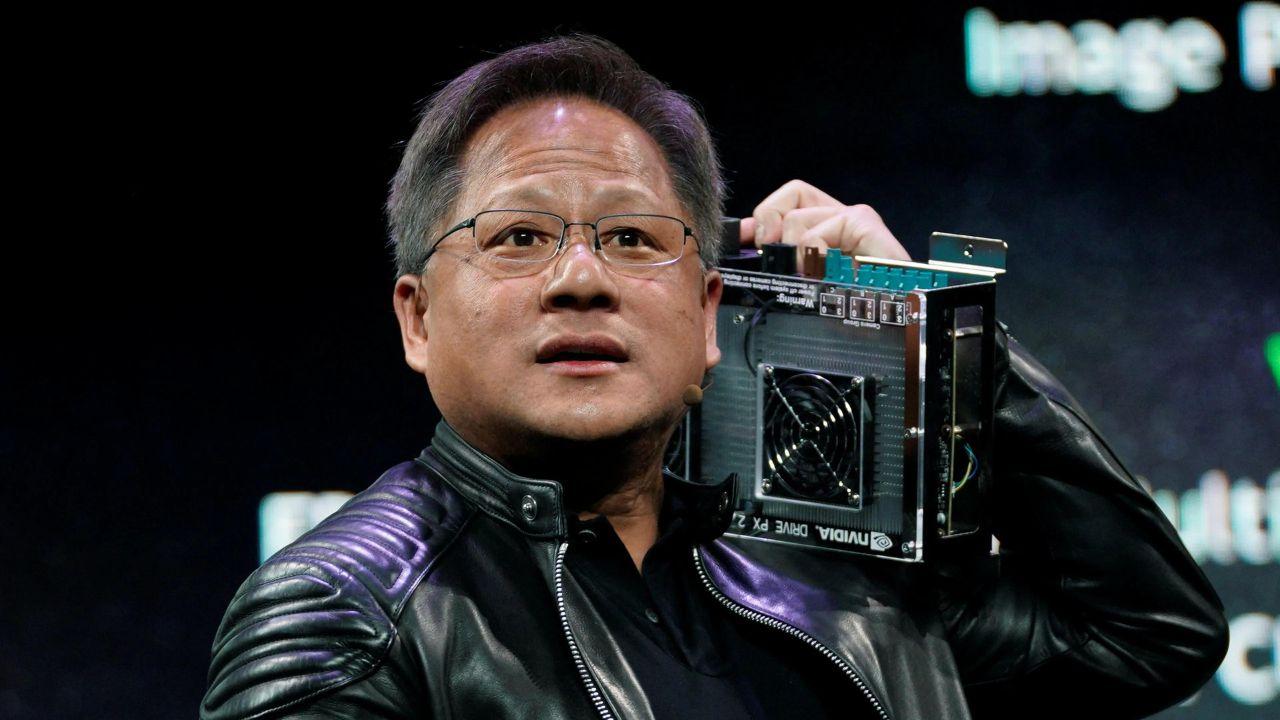

For those unaware, NVIDIA has positioned itself clearly and forcefully in the AI market. Jensen Huang, CEO of NVIDIA, was a pioneer in this sector and saw its possibilities early. The company offers highly specialized solutions that combine great computing power with great efficiency.

Processors are very inefficient

During his speech, Huang directly challenged the CPU industry. He emphasized that generative artificial intelligence and accelerated computing are the future of computing.

The big blow came when he asserted that Moore's Law is obsolete. He pointed out that future performance improvements will come from generative AI and accelerated computing. That is quite a jab at Intel, since Gordon E. Moore (who postulated Moore's Law) was one of the company's founders.

To back up this position, NVIDIA presented a cost analysis of accelerated computing. It gave figures for the total cost of a group of servers with 960 processors that would be used for this kind of workload. The whole infrastructure was taken into account, including the chassis, the interconnects and other elements.

According to the data presented, all these elements cost about $10 million. The system's energy consumption is put at 11 gigawatt-hours (GWh).

Huang compares these figures with a $10 million GPU cluster that, for the same price, could train up to 44 accelerated computing models. Not only that, NVIDIA claims its consumption would be 3.2 GWh, roughly 3.4 times less energy.

According to Huang, a GPU system that consumes 11 GWh could train up to 150 accelerated computing models. But be careful: this system would cost $34 million.
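The figures quoted so far can be put side by side with a little arithmetic. The Python sketch below uses only the numbers reported above, with the energy figures read as gigawatt-hours. The text at this point does not say how many models the CPU cluster trains, so that value is left out, and the dictionary and variable names are purely illustrative.

```python
# Figures as quoted in the article; all names here are illustrative only.
cpu_cluster = {"cost_musd": 10.0, "energy_gwh": 11.0}
gpu_clusters = {
    "same cost": {"cost_musd": 10.0, "energy_gwh": 3.2, "models": 44},
    "same energy": {"cost_musd": 34.0, "energy_gwh": 11.0, "models": 150},
}

# How many times less energy the $10M GPU cluster uses than the CPU cluster.
energy_ratio = cpu_cluster["energy_gwh"] / gpu_clusters["same cost"]["energy_gwh"]
print(f"Energy ratio at equal cost: {energy_ratio:.2f}x")

# Models trained per million dollars and per GWh for each GPU configuration.
efficiency = {}
for name, c in gpu_clusters.items():
    per_musd = c["models"] / c["cost_musd"]
    per_gwh = c["models"] / c["energy_gwh"]
    efficiency[name] = (per_musd, per_gwh)
    print(f"{name}: {per_musd:.2f} models per $1M, {per_gwh:.2f} models per GWh")
```

On these numbers the two GPU configurations come out nearly identical per dollar and per unit of energy, which suggests the larger system is simply the smaller one scaled up.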

In addition, it is stated that training just one accelerated computing model takes a $400,000 system that consumes …

He is keen to point out that training an accelerated computing model this way costs 4% of what it would on a CPU-based system and reduces power consumption by 98.8%.
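The percentages can be checked against the figures quoted earlier. This short Python sketch assumes the baseline is the $10 million, 11 GWh CPU cluster described above. The implied energy value is derived from the 98.8% figure and is not a number stated in the article. Variable names are illustrative.

```python
# Baseline CPU cluster and single-model GPU system, as quoted in the article.
cpu_cost_usd = 10_000_000
gpu_cost_usd = 400_000
cpu_energy_gwh = 11.0   # energy figure read as gigawatt-hours
reduction = 0.988       # claimed cut in power consumption

# Share of the CPU system's cost that the GPU system represents.
cost_share = gpu_cost_usd / cpu_cost_usd

# Energy the GPU system would use if the 98.8% cut applies to the 11 GWh baseline.
# This is an assumption-based derivation, not a quoted value.
implied_energy_gwh = cpu_energy_gwh * (1 - reduction)

print(f"Cost share: {cost_share:.0%}")
print(f"Implied energy: {implied_energy_gwh:.3f} GWh")
```

The cost share matches the 4% quoted by Huang, which supports reading both percentages as comparisons against the $10 million CPU cluster.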

So, are processors' days numbered?

Not really. Processors are still needed today because, to put it very simply, they perform management functions. What Huang is telling us is that a CPU-based system is less efficient and more expensive than a GPU-based one.

Even so, any server, cluster or advanced system requires at least one processor. The big advantage of GPUs is massively parallel computing, which benefits AI workloads that require millions of operations per second.

What Huang is pointing out is fairly obvious: x86 processors are quite inefficient. This drives many people to explore RISC and RISC-V based solutions. These are more efficient architectures, but also much less powerful.