Press release

What's New

Intel and the Georgia Institute of Technology (Georgia Tech) announced today that they have been selected to lead a Guaranteeing Artificial Intelligence (AI) Robustness against Deception (GARD) program team for the Defense Advanced Research Projects Agency (DARPA). Intel is the prime contractor in this four-year, multimillion-dollar joint effort to improve cybersecurity defenses against deception attacks on machine learning models.

“Intel and Georgia Tech are working together to advance the ecosystem's collective understanding of, and ability to mitigate, AI and machine learning vulnerabilities. Through innovative research in coherence techniques, we are collaborating on an approach to enhance object detection and to improve the ability of AI and machine learning to respond to adversarial attacks.” - Jason Martin, principal engineer at Intel Labs and Intel's principal investigator for the DARPA GARD program.

Why it matters

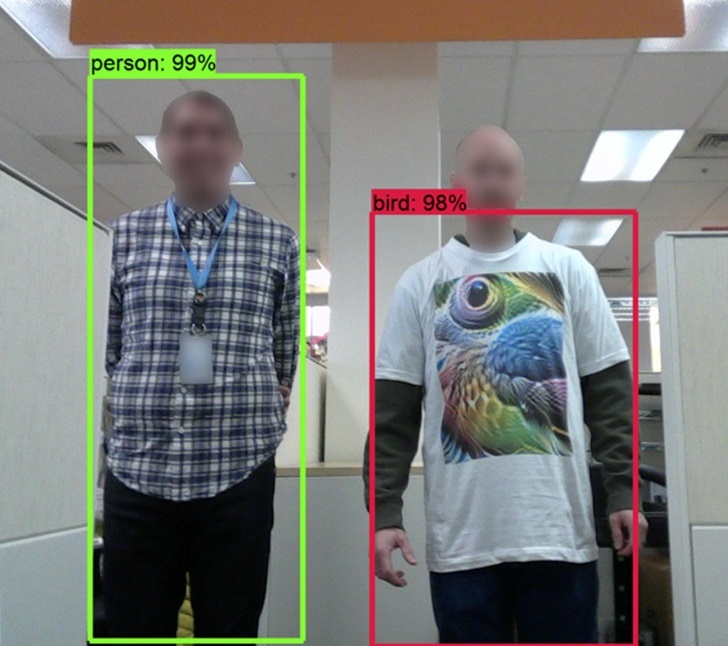

Although rare, adversarial attacks attempt to deceive, alter, or corrupt a machine learning algorithm's interpretation of data. As AI and machine learning models are increasingly incorporated into semi-autonomous and autonomous systems, it is critical to keep improving their stability, safety, and security against unexpected or deceptive interactions. For example, AI misclassifications and misinterpretations at the pixel level could lead to images being misread and mislabeled, and subtle modifications to real-world objects could confuse AI perception systems. The GARD program will help AI and machine learning technologies become better equipped to defend against potential future attacks.

Details

Current defense efforts are designed to protect against specific, predefined adversarial attacks, but remain vulnerable to attacks that fall outside their specified design parameters. GARD intends to approach machine learning defense differently: by developing broad-based defenses that address the many possible attacks in a given scenario that could cause a machine learning model to misclassify or misinterpret data. Thanks to its broad architectural footprint and security leadership, Intel is uniquely positioned to help drive innovation in AI and machine learning technology, with a significant stake in the outcome.

The goal of the GARD program is to establish theoretical foundations for machine learning systems that not only identify system vulnerabilities and characterize properties that enhance system robustness, but also promote the creation of effective defenses. Through these program elements, GARD aims to create deception-resistant machine learning technologies with stringent criteria for evaluating their effectiveness.

What's Next

In the first phase of the GARD program, Intel and Georgia Tech are enhancing object detection technologies through spatial, temporal, and semantic coherence, for both still images and videos. Intel is committed to driving AI and machine learning innovation and believes that working with skilled security researchers from around the world is a crucial part of addressing potential security vulnerabilities for the wider industry and our customers.
