Its greatest novelty, however, lies in the AMX unit, a tensor-type unit like those found in GPUs, but with one difference. In graphics cards the register file is shared, so the tensor units have to take turns with the SIMD units. AMX, by contrast, is a full-fledged execution unit that can operate at the same time as the rest of the processor, because it has its own set of 1024 registers for its exclusive use.

Differences from 12th Gen Intel Core

The first thing to keep in mind is that the Sapphire Rapids architecture uses a good deal of the technology that was also implemented in Alder Lake, because both are manufactured on the same node: Intel 7, formerly known as 10 nm Enhanced SuperFin.

That is why they share the main core, Golden Cove, known as the P-Core in the company’s current jargon. By contrast, the fourth-generation Xeon Scalable processor has no Gracemont cores, or E-Cores, inside. The differences do not end there, as it is quite possible that we will see models with up to 20 cores per tile, or 80 per processor.

However, there are some significant changes in Sapphire Rapids processors compared to their personal computer counterparts, the first of which is that AVX-512 is enabled.

The biggest change, though, is not in the CPU cores but in an accelerator called the Data Streaming Accelerator, an IOMMU unit that has been enhanced for use with hypervisors, and therefore with virtualized operating systems, which makes it a design aimed at server-side cloud computing platforms.

How fast will Sapphire Rapids be?

At this time, we don’t know the clock speed of the Golden Cove cores in each Sapphire Rapids tile, but we do know that it will be lower than in Alder Lake. This is not because Intel has said so, but purely from knowledge of the subject:

- AVX-512 instructions consume more power than others. To compensate, the clock speed is reduced while they execute.

- This is a server processor that in many cases will run 24/7 without interruption, so it cannot afford to boost or run at high clock speeds.

- It has always been the case that as the number of cores in a CPU of the same architecture increases, the clock speed gradually decreases, because the cost of communication must be kept down.

If we compare it with the current Ice Lake-SP, based on Sunny Cove, we see that its maximum clock speed is 4.1 GHz on a very similar manufacturing process.

Reticle limit exceeded

The title may seem confusing, but keep in mind that when designing a processor there is a limit on its size, that is, on its area. The reason is that the larger a chip's surface area, the fewer chips fit on each wafer, which makes each one more expensive, and the more defects are likely to appear on each chip. In the end it is not profitable to make chips that large.

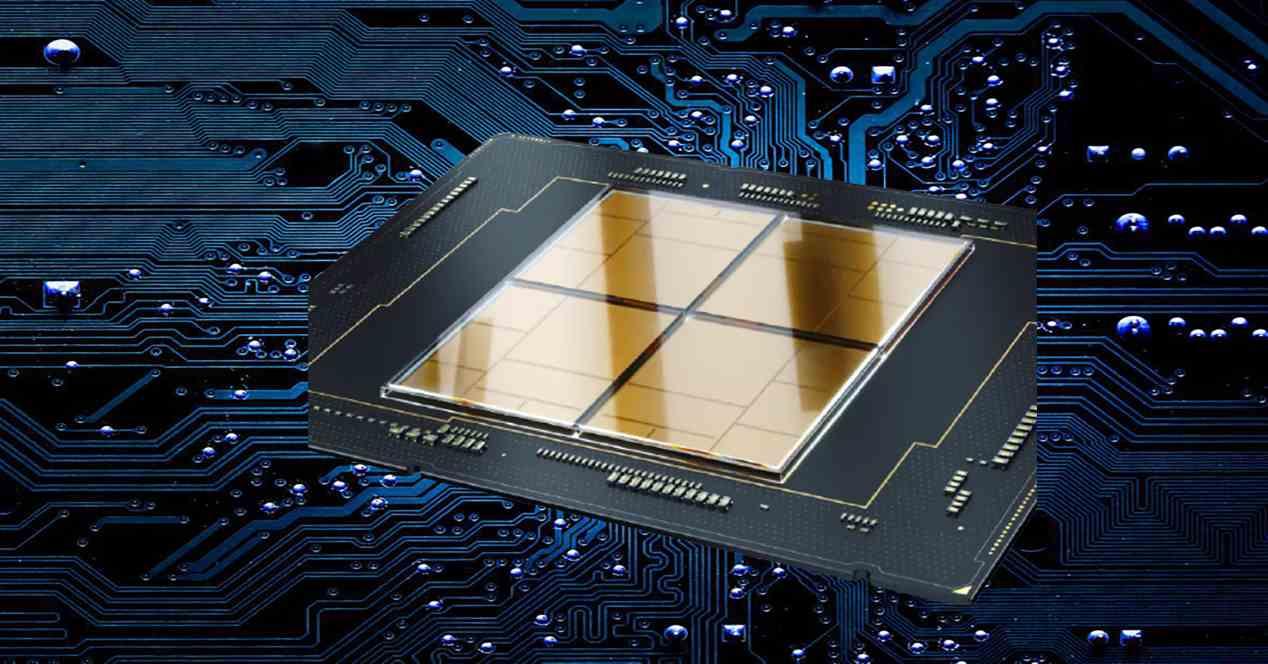

The solution proposed by the industry is chiplets, which consist of dividing a very large chip into several smaller ones that work as one. The advantage is that by using several chips we can exceed the reticle limit that applies to a single chip, which in practice amounts to having a larger processor, with more transistors and therefore greater complexity.
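The cost argument behind chiplets can be sketched with a little arithmetic. The following is a minimal illustration, not Intel's actual cost model: it assumes a 300 mm wafer, a common dies-per-wafer approximation, a simple Poisson yield model, and a defect density of 0.1 defects per cm². That figure and the die areas of 800 mm² and 200 mm² are made-up values chosen only to show the trend.

```python
import math

WAFER_DIAMETER_MM = 300.0   # assumed wafer size
DEFECTS_PER_CM2 = 0.1       # assumed defect density, illustrative only


def dies_per_wafer(die_area_mm2: float) -> int:
    """Approximate die count: wafer area / die area, minus an edge-loss term."""
    radius = WAFER_DIAMETER_MM / 2.0
    gross = math.pi * radius * radius / die_area_mm2
    edge_loss = math.pi * WAFER_DIAMETER_MM / math.sqrt(2.0 * die_area_mm2)
    return max(0, int(gross - edge_loss))


def poisson_yield(die_area_mm2: float) -> float:
    """Fraction of dies expected to be defect-free under a Poisson model."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * DEFECTS_PER_CM2)


def good_dies_per_wafer(die_area_mm2: float) -> float:
    """Expected number of working dies per wafer."""
    return dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2)


if __name__ == "__main__":
    monolithic = good_dies_per_wafer(800.0)      # one large hypothetical chip
    four_tiles = good_dies_per_wafer(200.0) / 4  # processors built from 4 tiles
    print(f"Monolithic processors per wafer: {monolithic:.1f}")
    print(f"Four-tile processors per wafer:  {four_tiles:.1f}")
```

Under these assumptions, the same wafer yields roughly twice as many working four-tile processors as monolithic ones, before accounting for the packaging and interconnect costs discussed next.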

Of course, this brings up the problem of the cost of communication. By separating the chips, we increase the wiring distance and end up increasing the power consumed by communication. Let’s see how Intel handled it in Sapphire Rapids.

EMIB in the next-generation Xeons

The clearest solution is to shorten the paths. For that, an interposer is placed underneath: a communication structure responsible for connecting vertically to the processors and memories mounted on top of it. Currently there are two ways to do this, through-silicon vias or silicon bridges. Intel’s EMIB technology is of the second type, and it handles the communication between the four tiles.

While in the AMD Zen 2 and Zen 3 architectures the last-level cache, or LLC, sits in the CCD chip, in Sapphire Rapids it is split between the different tiles. What is particular about this cache? Since every core, from the first to the last, uses the same RAM, the last-level cache is shared by all of them: it is global, not local. Each tile of the Sapphire Rapids architecture must therefore be able to access the part of the LLC located in the other tiles at the same speed as it accesses its own.

What the silicon bridges do is connect the parts of the last-level cache located in each tile in such a way that there is no additional latency. They also reduce the energy cost of communication. In the end the effect is, for practical purposes, the same as having a single chip, but without the size limit in terms of surface area.

Support for CXL 1.1 in Sapphire Rapids

CXL is going to be one of the most important standards in the years to come. Unfortunately, Sapphire Rapids does not support the entire standard. And what is this technology used for? It is an enhancement built on the PCI Express interface that provides cache coherency between processors, expansion cards, and peripherals, so that they all share the same addressing.

The CXL standard has three protocols: CXL.io, CXL.cache and CXL.mem. Its limitation? Sapphire Rapids does not support the last of these, which means that cache-coherent RAM expansions over PCIe are not supported. One might think this is also why the HBM2e memory of some processor models is not in the same address space, but that would be the case even with full Compute Express Link support: communication with the high-bandwidth memory goes through four additional EMIB bridges, so the two do not share the same memory space.
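To make the consequence of a missing protocol concrete, here is a small sketch that maps a host's supported CXL protocols to the device classes it could use. The Type 1, 2 and 3 device classes and their protocol requirements come from the CXL specification, not from this article. The names `CxlProtocol`, `DEVICE_TYPES` and `usable_device_types` are hypothetical and exist only for illustration.

```python
from enum import Flag, auto


class CxlProtocol(Flag):
    """The three CXL protocols (hypothetical modeling, for illustration)."""
    IO = auto()     # CXL.io
    CACHE = auto()  # CXL.cache
    MEM = auto()    # CXL.mem


# Protocols each CXL device class needs, per the CXL specification.
DEVICE_TYPES = {
    "Type 1 (caching accelerator)": CxlProtocol.IO | CxlProtocol.CACHE,
    "Type 2 (accelerator with memory)": CxlProtocol.IO | CxlProtocol.CACHE | CxlProtocol.MEM,
    "Type 3 (memory expander)": CxlProtocol.IO | CxlProtocol.MEM,
}


def usable_device_types(host_protocols: CxlProtocol) -> list[str]:
    """Return the device classes whose required protocols the host provides."""
    return [
        name
        for name, needed in DEVICE_TYPES.items()
        if (needed & host_protocols) == needed
    ]


if __name__ == "__main__":
    # Host as described in the text above: CXL.io and CXL.cache, no CXL.mem.
    described_host = CxlProtocol.IO | CxlProtocol.CACHE
    print(usable_device_types(described_host))
```

With the protocol set described in the text, only the caching-accelerator class qualifies. Memory expanders drop out because they depend on CXL.mem.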
