NVIDIA has become the benchmark in the market for GPUs used to train the large language models (LLMs) behind artificial intelligence. According to the financial results the company announced a few weeks ago, this market is far more lucrative for it than the consumer market, where it is also the reference. Intel and AMD are doing everything they can to follow in its footsteps, although they still have a long way to go.

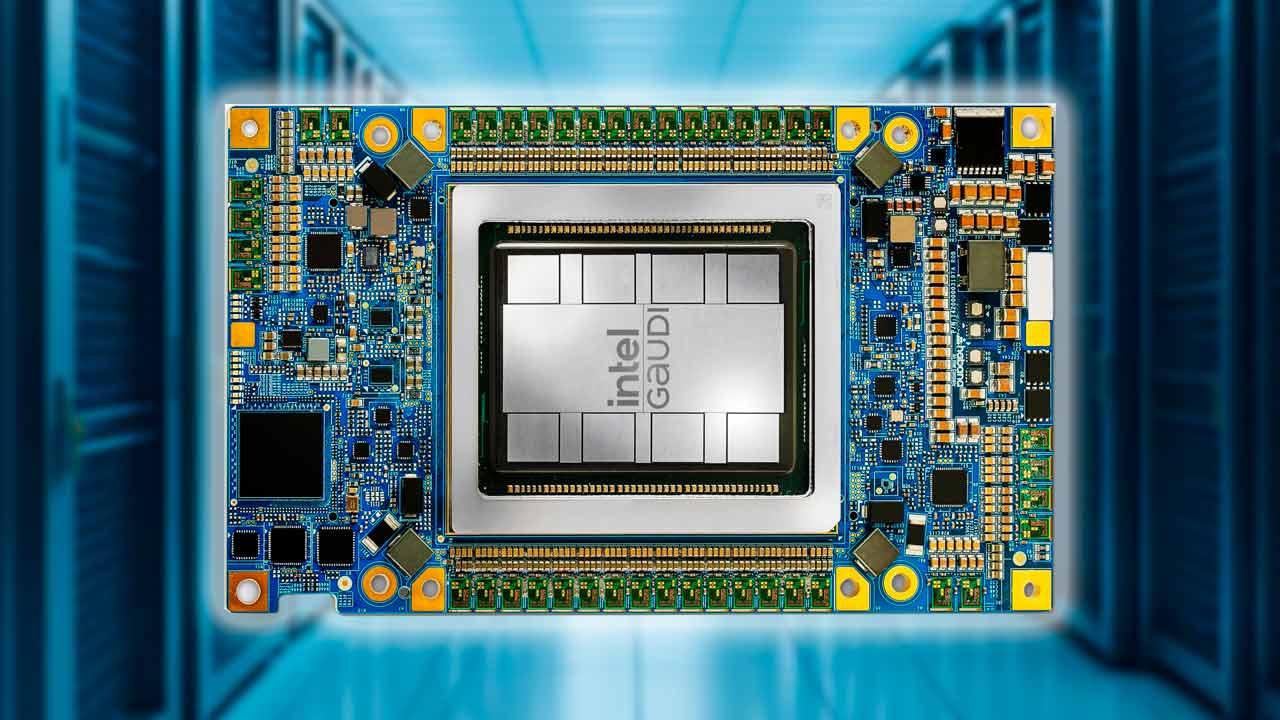

Intel’s latest proposal for this market is Gaudi3, its latest-generation accelerator, which has just been presented at the Vision 2024 event and whose specifications are well above those of the previous generation, Gaudi2.

In addition, according to Intel, Gaudi3 accelerators are much cheaper than NVIDIA’s H100 GPU. Provided they are up to the task in terms of performance, this could mean significant savings, since large companies do not buy these accelerators one at a time to train language models but in large quantities.

Intel Gaudi3 wants a share of NVIDIA’s GPU market

Intel is so confident in Gaudi3 that during the presentation it compared its performance with NVIDIA’s H100 and H200, both based on the Hopper architecture. It left out, however, AMD’s MI300A/X accelerators, which AMD presented at the end of 2023 and which, according to their manufacturer, are more powerful than NVIDIA’s H100 GPU.

The Gaudi3 accelerator has two 5 nm dies manufactured by TSMC, with a total of 64 fifth-generation Tensor cores. This represents a significant increase in Tensor core count compared with the previous generation.

As for memory, Gaudi3 has 128 GB of HBM2e offering a bandwidth of 3.7 TB/s, compared with the 2.45 TB/s of Gaudi2, which had 96 GB of HBM2e memory. The new Intel accelerator is available in a PCIe form factor as the HL-388 model, which is compatible with PCIe 5.0 and has a TDP of between 450 and 600 W, a very high figure for this format. It is also available in the OAM form factor, in the HL-328, HL-325 and HL-335 versions, with a TDP ranging from 450 to 900 W and support for both air and liquid cooling.
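To put the generational jump in memory into numbers, here is a quick calculation that uses only the capacity and bandwidth figures quoted above. It is a simple sketch; the dictionary layout and the `gen_over_gen` helper are illustrative names of our own, not anything published by Intel.

```python
# Memory figures quoted in the article (capacity in GB, bandwidth in TB/s).
# The dictionary layout is an illustrative choice, not an Intel data format.
GAUDI2 = {"capacity_gb": 96, "bandwidth_tbs": 2.45}
GAUDI3 = {"capacity_gb": 128, "bandwidth_tbs": 3.7}


def gen_over_gen(new: dict, old: dict) -> dict:
    """Hypothetical helper: new/old ratio for each metric, rounded to two decimals."""
    return {key: round(new[key] / old[key], 2) for key in old}


ratios = gen_over_gen(GAUDI3, GAUDI2)
print(ratios)  # {'capacity_gb': 1.33, 'bandwidth_tbs': 1.51}
```

In other words, Gaudi3 offers about a third more memory capacity and roughly 51% more memory bandwidth than Gaudi2.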

Performance

According to the comparison Intel showed during the presentation, Gaudi3 is up to 1.7 times faster than NVIDIA’s H100 GPU at training language models, depending on the model used.

In inference, performance varies depending on the language model, with Gaudi3 reaching up to 70% higher speed. As for energy efficiency, a key factor in data centers that NVIDIA’s CEO, judging by his latest statements, does not take into consideration, Gaudi3 is between 1.2 and 2.3 times more efficient.

Intel’s problem with Gaudi3 is that it is already lagging behind: it is being compared with NVIDIA’s current generation of GPUs, while NVIDIA has already presented the B200 GPU based on the Blackwell architecture. This model has almost three times as many transistors as the H100 and will reach the market in the second quarter of 2024, the same period in which Gaudi3 is scheduled to launch.