Many people's worst fear has come true for a Venezuelan woman and other users: a smart vacuum cleaner that was still in testing, and that maps the spaces it cleans, caused images of her at home to end up on Facebook.

This is an unprecedented event that has highlighted the danger posed by poor control over the privacy of images and sounds captured by this type of device inside homes.

The problem reportedly did not come from the device itself, a Roomba, but from Scale AI, the company responsible for labeling data to train artificial intelligence.

Private images taken by a smart vacuum cleaner are leaked on the internet

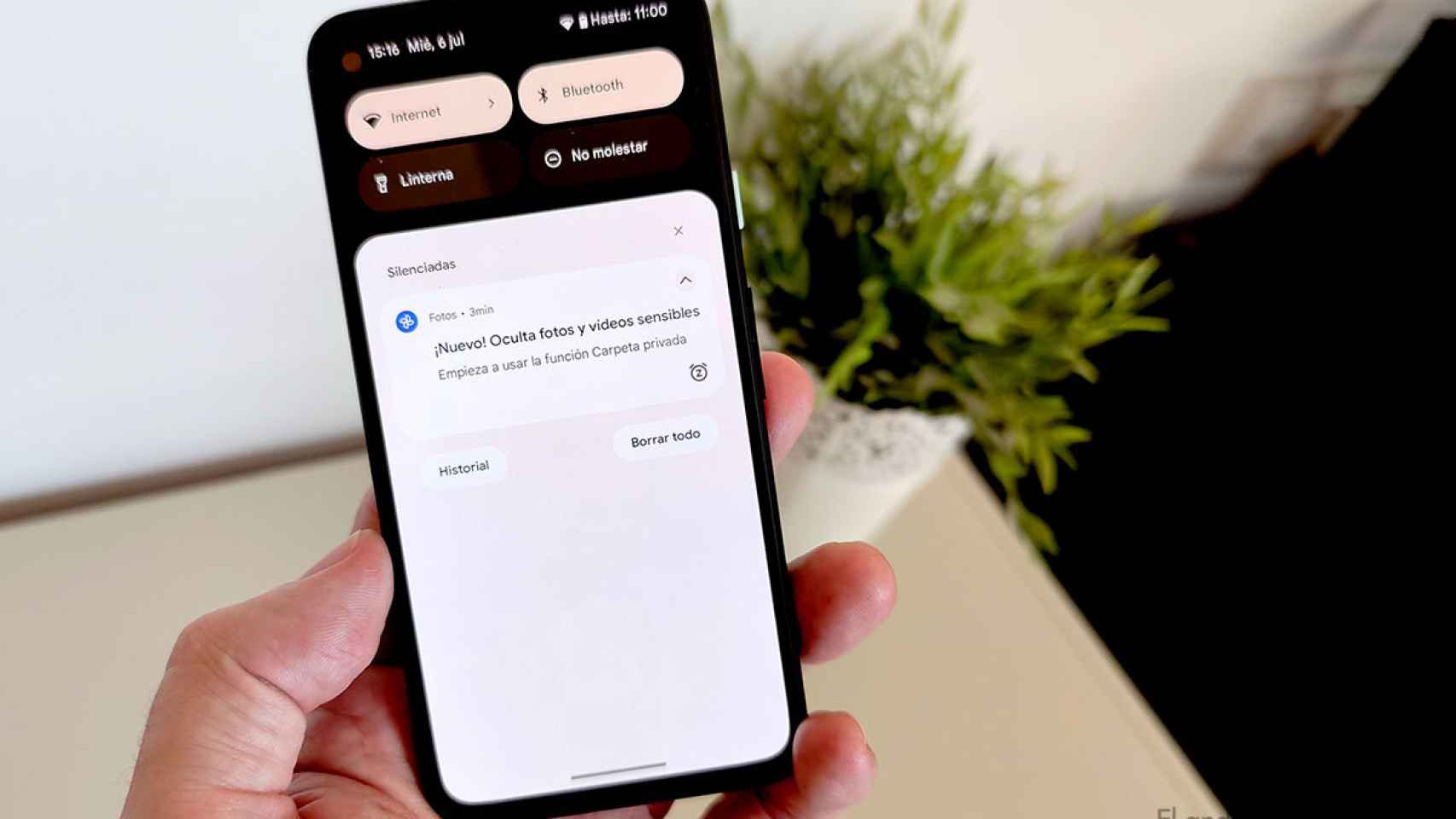

Image taken by a smart vacuum cleaner

MIT Technology Review

El Androide Libre

Smart vacuum cleaners are often equipped with sensors and cameras that help them move around, avoid obstacles and map your home to create cleaning routes and more. To do that, however, they have to save those images and use them to train the AI.

Earlier this year, MIT Technology Review received screenshots of private photos taken by these devices and posted to private social media groups. iRobot said the devices involved were not end products, but prototypes.

According to the company, these units carried a green sticker warning that they were recording. After the recordings were made, the data was sent to Scale AI so that sensitive images and videos unrelated to the purpose of the recordings could be deleted.

The consent and data processing agreements of the two companies have not been disclosed. Although these were test units rather than final products, the incident highlights the danger of this type of device.

Many of these smart devices rely on machine learning and artificial intelligence to perform their functions and improve over time, but it is important to note that to do this they collect audio and video data, which is used to refine their algorithms.

This is not to say that these devices are dangerous in themselves or that they will spy on you while you are at home, but the fact remains that these images and videos were supposed to be discarded by a human team.

The responsibility in these situations should lie not with the user but with the company that processes the data, which must ensure maximum confidentiality for its customers.

In this case, Scale AI uses data labelers working remotely to classify images so that the smart vacuum can learn from the images it takes.

Still, if you are worried that one of these devices could record you, it is better not to expose yourself to them in certain situations. Even so, it is worrying that data captured by smart home devices could be stolen while it is being processed.