Processors keep gaining cores, but at some point the core count will stop climbing. What factors will slow the growth in the number of cores in a CPU?

Why do we use multiple cores in a single processor?

Until the mid-2000s, PC processors were single-core; since then, they have been multi-core.

To understand why, we need to look at Dennard scaling, which is based on the following formula:

Consumption = Q * f * C * V²

Where:

- Q is the number of active transistors.

- f is the clock frequency.

- C is the capacitance of the transistors (their ability to hold a charge).

- V is the voltage.

The magic of this formula is that when transistors shrink, not only does the density per unit area increase, but V and C also decrease. This behavior is known as Dennard scaling because it was described by Robert H. Dennard, an IBM scientist who, among other things, invented DRAM.

Dennard scaling uses the value S as the ratio between the new node and the old one. If, for example, the old node was 10nm and the new one is 7nm, then the value of S is 0.7.
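As a rough illustration, the sketch below applies one ideal scaling step to the formula. It assumes the textbook scaling rules, which the article does not spell out in full: the transistor count in a fixed area grows by 1/S², the frequency by 1/S, while capacitance and voltage shrink by S. The function names and the unitless starting values are hypothetical.

```python
def dennard_scale(q, f, c, v, s):
    """Apply one ideal Dennard scaling step with ratio s (0 < s < 1).

    Assumed rules: count x 1/s^2, frequency x 1/s,
    capacitance x s, voltage x s.
    """
    return q / s**2, f / s, c * s, v * s


def consumption(q, f, c, v):
    """Consumption = Q * f * C * V^2, as in the formula above."""
    return q * f * c * v**2


# Hypothetical, unitless starting point chosen only for illustration.
old_node = (1.0, 1.0, 1.0, 1.0)
new_node = dennard_scale(*old_node, s=0.7)

print("old node:", consumption(*old_node))
print("new node:", consumption(*new_node))
```

Under these assumptions the two values match (up to floating-point rounding): the same area holds about twice as many, faster transistors for the same power.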

But Dennard scaling reached its limit at around the 65nm node, which ushered in the post-Dennard era. The main reason was a part of the formula that had been ignored until then because its effects were negligible: leakage current.

With leakage included, the formula looks like this:

Consumption = Q * f * C * V² + (V * Leakage current)

The consequence was that Q, f and C kept scaling as before, but the voltage did not: it became almost constant. Because the achievable clock speed is tied to the voltage, architects had to start looking for other ways to get faster and more powerful processors.
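To see what a constant voltage does to the formula, here is a minimal sketch. It assumes that Q, f and C still follow the ideal rules (1/S², 1/S and S respectively) while V stays fixed. The leakage current is left at a hypothetical value of zero so that only the dynamic term is compared; all names and numbers are illustrative.

```python
def consumption(q, f, c, v, leakage_current=0.0):
    """Consumption = Q * f * C * V^2 + (V * leakage current)."""
    return q * f * c * v**2 + v * leakage_current


def post_dennard_scale(q, f, c, v, s):
    """One shrink where Q, f and C scale ideally but V stays constant (assumption)."""
    return q / s**2, f / s, c * s, v


# Hypothetical, unitless starting point chosen only for illustration.
old_node = (1.0, 1.0, 1.0, 1.0)
new_node = post_dennard_scale(*old_node, s=0.7)

ratio = consumption(*new_node) / consumption(*old_node)
print(f"power in the same area grows by a factor of {ratio:.2f}")
```

With S = 0.7 the factor is 1/S², roughly 2: the power needed in the same area doubles with each shrink instead of staying flat.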

As a result, the era of single-core processors came to an end, and the big PC processor manufacturers such as Intel and AMD started betting on multi-core designs.

What is the limit on the number of cores?

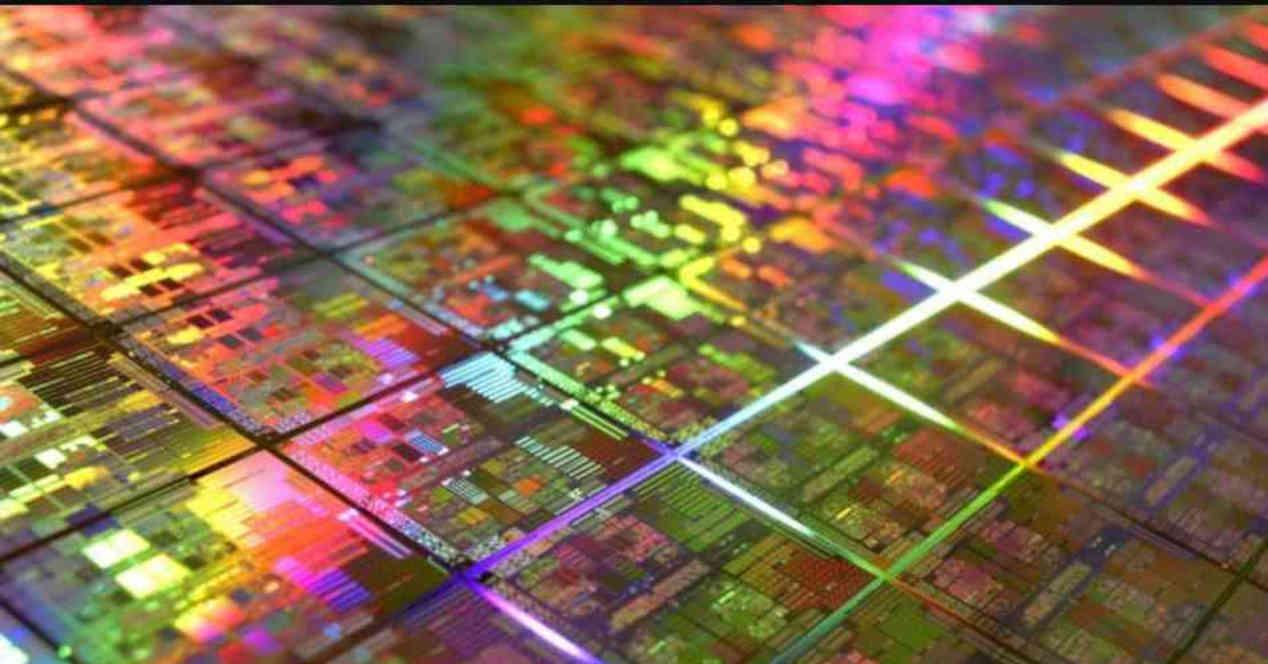

Several factors limit the number of cores a processor is built with. The first and most obvious is density, the number of transistors per unit area, which grows with each new node and therefore should make it possible to build processors with more and more cores.

But it is not that simple, and below we explain the challenges that architects face when designing new processors.

Internal communication between the different cores

A very important element is the communication between the different cores, and between the cores and memory. The number of connections to manage grows much faster than the number of elements in the system: with direct links between every pair of elements, it grows quadratically.

This has forced engineers to work on increasingly complex communication structures, all so that the different cores of a processor can send and receive data among themselves in a timely manner.
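The growth in wiring can be made concrete with a small sketch. It compares two illustrative topologies that are not taken from the article: a fully connected design, where every element has a direct link to every other, and a simple ring. The function names are hypothetical.

```python
def fully_connected_links(n):
    """Direct links needed so each of n elements reaches every other one."""
    return n * (n - 1) // 2


def ring_links(n):
    """Links in a ring of n elements (n >= 3): one per element."""
    if n < 3:
        raise ValueError("a ring needs at least 3 elements")
    return n


for cores in (4, 8, 16, 64):
    print(cores, fully_connected_links(cores), ring_links(cores))
```

Direct links quickly become impractical, which is one reason real designs fall back on shared structures such as rings or meshes, at the price of longer paths between distant cores.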

The problem of dark silicon

Dark silicon is the part of an integrated circuit that cannot be powered at the normal operating voltage (the one defined for the chip) within a given power consumption limit.

The key is that the amount of dark silicon increases by a factor of 1/S² from one node to the next. Thus, even though we can physically fit twice as many transistors, the fraction of them that is usable decreases from one generation to the next.
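A simplified way to picture this is to ask what fraction of the transistors fits inside a fixed power budget after several shrinks. The sketch below is only a model under stated assumptions: the voltage stays constant, the power needed to run the whole chip grows by 1/S² per generation, and the budget never changes. The function name is hypothetical.

```python
def active_fraction(s, generations):
    """Fraction of transistors that can run at once under a fixed power budget.

    Assumes full-chip power grows by 1/s^2 per generation (constant voltage),
    so the usable share shrinks by s^2 each time.
    """
    if not 0.0 < s < 1.0:
        raise ValueError("s must be between 0 and 1")
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return s ** (2 * generations)


for n in range(4):
    print(f"after {n} shrinks: {active_fraction(0.7, n):.1%} of transistors active")
```

In this model, with S = 0.7, about half of the chip has to stay dark after a single shrink, and the share keeps falling with every further generation.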

The cost per area is increasing, not decreasing

Down to 28nm, the cost per mm² of area decreased, but beyond 28nm it has increased. This means that if manufacturers want to hold the cost of processors steady, they have to reduce the die area, and if they want to keep up the pace of evolution instead, processors will become more and more expensive.

This may seem unrelated to the number of cores, but it is not: architects work with a transistor budget, and the limiting factor of that budget is cost. This in turn is tied to the cost per wafer.

Amdahl’s law: software does not adapt to the number of cores

Amdahl’s law was formulated by Gene Amdahl, a computer scientist famous, among other things, for being the architect of the IBM System/360. Although Amdahl’s law is not a physical phenomenon, it reminds us that the entire workload of a computer program cannot be parallelized.

The consequence is that while some parts of the work speed up with the number of cores in the system, other parts cannot scale in parallel because they run serially and depend on the performance of each individual core. Thus, workloads that cannot be run in parallel do not get any faster as the number of cores increases.
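Amdahl’s law is usually written as speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of cores. The sketch below evaluates it for a hypothetical program that is 90% parallel; that figure is an assumption chosen for illustration, not a measurement.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Speedup predicted by Amdahl's law for a given parallel fraction."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workload: 90% of the work can be parallelized.
for cores in (1, 2, 4, 8, 16, 64):
    print(f"{cores:>3} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

However many cores are added, the speedup for this workload stays below 1 / (1 - 0.9) = 10x, because the serial 10% always runs at the speed of a single core.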

This is why architects, instead of simply putting more and more cores in processors, design new and increasingly efficient architectures. The main goal of these designs is to reduce the time that each individual core takes to execute certain instructions.

On the other hand, software is designed for the hardware on the market. Most current software is optimized for the average number of cores people have in their PCs, and although there is a certain degree of scalability, it is never linear but rather closer to logarithmic. Some applications therefore reach a point where, no matter how many cores a processor has or even how fast it is, they stop scaling or scale far more slowly than before.
