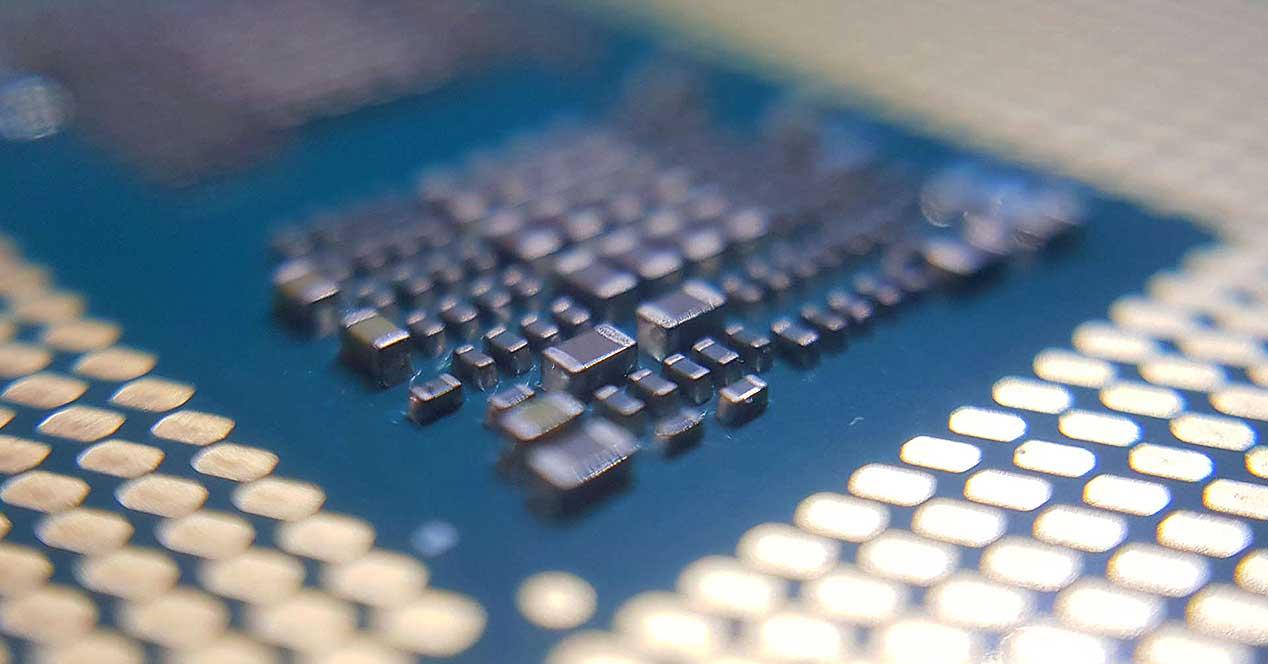

On-chip cache memory first appeared in Intel's x86 line with the 80486, but its origins go back to the IBM System/360, where the idea of a cache was first implemented. Today, because of the speed gap between memory and CPUs, GPUs, and other processors, it has become an indispensable part of every processor.

Why is the cache needed?

Cache memory is needed because RAM is too slow to feed a CPU with instructions and data at the rate it can execute them, and RAM cannot simply be made faster. The solution? Add a fast internal memory to the processor that keeps the most recently used data and instructions close at hand.

The problem is that doing this by hand is extremely complex: it would force programs themselves to manage that memory, which costs extra CPU cycles. The solution? Build the memory with a hardware mechanism that automatically copies the data and instructions closest to what is being executed.

Because the cache is inside the processor, when the processor finds the data there it can access it much faster than if it had to go out to RAM.

How does the cache work?

First of all, keep in mind that the cache is not part of RAM and does not behave like it. It cannot be controlled like RAM, where programs allocate and free memory whenever they need to. The reason? The cache works completely separately from RAM.

The job of the cache is to bring data from memory closer to the processor. Program code is usually executed in sequence: if the current instruction is at address 1000, the next one will be at 1001 unless it is a jump instruction. The idea of a cache? Copy a block of the nearby data and instructions into a memory inside the processor ahead of time.
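To make the benefit of sequential access concrete, here is a minimal sketch of a toy direct-mapped cache. The sizes (`LINE_SIZE`, `NUM_LINES`) and the function name `count_hits` are assumptions chosen for illustration; real caches are far larger and more elaborate.

```python
# Hypothetical parameters, chosen only to keep the example small.
LINE_SIZE = 8   # addresses copied into the cache together on a miss
NUM_LINES = 4   # number of lines in this toy direct-mapped cache


def count_hits(addresses):
    """Simulate the toy cache and return (hits, misses) for the accesses."""
    lines = [None] * NUM_LINES  # each slot remembers which memory block it holds
    hits = misses = 0
    for addr in addresses:
        block = addr // LINE_SIZE   # memory block that contains this address
        slot = block % NUM_LINES    # the only slot where that block may live
        if lines[slot] == block:
            hits += 1
        else:
            misses += 1
            lines[slot] = block     # on a miss, bring in the whole block
    return hits, misses


# 32 consecutive addresses: only the first access to each block misses.
sequential = list(range(1000, 1032))
# 32 addresses spaced one full line apart: every access touches a new block.
strided = list(range(1000, 1256, 8))

print("sequential:", count_hits(sequential))
print("strided:   ", count_hits(strided))
```

In this sketch the sequential walk misses once per block and hits on every other access, while the strided walk never reuses a block it has loaded, which is why code that runs through memory in order benefits so much from a cache.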

When the CPU or GPU looks for a piece of data or an instruction, it first checks the cache closest to the processor, which is the lowest level, and then moves up level by level until it finds what it is looking for. The idea is to avoid accessing memory whenever possible.
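The level-by-level search can be sketched as follows. This is a simplified model under stated assumptions: each cache level is a plain dictionary with unlimited capacity (no eviction), and the function name `lookup` is hypothetical.

```python
def lookup(address, levels, ram):
    """Search each cache level in order (L1 first), then fall back to RAM.

    `levels` is a list of dicts, nearest to the processor first.
    Returns (value, where_found).
    """
    for i, cache in enumerate(levels):
        if address in cache:
            value = cache[address]
            found = f"L{i + 1}"
            break
    else:
        # No level had the address: go all the way to memory.
        value = ram[address]
        found = "RAM"
        i = len(levels)
    # Copy the value into every level that missed, so the next access
    # to the same address is served closer to the processor.
    for cache in levels[:i]:
        cache[address] = value
    return value, found


l1, l2 = {}, {0x10: "a"}
ram = {0x10: "a", 0x20: "b"}
print(lookup(0x10, [l1, l2], ram))  # found in L2, then copied into L1
print(lookup(0x10, [l1, l2], ram))  # now served from L1
print(lookup(0x20, [l1, l2], ram))  # not cached anywhere, fetched from RAM
```

A real cache has limited capacity and must also decide which entry to evict when it is full; that part is deliberately left out here.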

Cache Levels on CPU and GPU

In a multi-core system with two or more cores, all of them access the same RAM: there is a single memory interface and multiple cores competing for it. This is where an additional, shared cache level is needed, one that talks to the memory controller on one side and to the cache levels closer to the cores on the other.

Multi-core processors typically have two cache levels, but some designs group cores into clusters, each sharing an L2 cache. Because each cluster coexists with other clusters on the same chip, a third cache level is sometimes required.

Although not every design includes one, a level 3 cache appears whenever the memory interface is a big enough bottleneck that adding another level to the hierarchy improves performance.

The memory hierarchy

The rules of the memory hierarchy are very clear. It starts at the processor registers, ends at the slowest memory in the system, and always follows the same rules:

- The current level of the hierarchy has more capacity than the previous one but less than the next.

- As we move away from the CPU, access latency increases.

- As we move away from the processor, the available bandwidth decreases.

In the specific case of cache levels, each level closer to the processor stores less information and holds a fragment of the next level. Thus, in an inclusive hierarchy, the L1 cache is a subset of the data in the L2 cache, which in turn is a subset of the data in the L3 cache, if there is one.

However, the last-level cache, the one closest to memory, is not a fixed subset of RAM; it holds only a copy of the memory page, or set of pages, that the processor is currently working with.
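The subset relationship between levels can be expressed as a small check. This is only an illustrative sketch: the function name `is_inclusive` and the sample addresses are assumptions, and each level is modeled simply as the set of addresses it currently holds.

```python
def is_inclusive(levels):
    """Return True if each level's addresses are all present in the next level.

    `levels` is a list of address collections, nearest to the processor first.
    """
    return all(
        set(inner) <= set(outer)
        for inner, outer in zip(levels, levels[1:])
    )


l1 = {0x100, 0x108}
l2 = {0x100, 0x108, 0x200}
l3 = {0x100, 0x108, 0x200, 0x300, 0x308}

print(is_inclusive([l1, l2, l3]))        # True: L1 is within L2, L2 within L3
print(is_inclusive([l1 | {0x400}, l2]))  # False: 0x400 is in L1 but not in L2
```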

Cache misses, or when data is not found

One of the biggest performance problems occurs on a cache miss, that is, when the data is not found at a given cache level. This is extremely harmful for the performance of an out-of-order processor, since the consequence is a lot of wasted processor cycles, and it is no less harmful for an in-order processor.

For a processor design, it is a failure if the total time spent on cache misses, added to the lookup time itself, makes finding data slower on average than going straight to RAM. Many CPU designs have had to go back to the drawing board because their overall lookup time was longer than simply accessing RAM.

This is why architects are very reluctant to add extra levels to an architecture for their own sake: each one must be justified by a performance improvement.
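A rough way to reason about this trade-off is to estimate the average access time of the whole hierarchy and compare it with the latency of RAM alone. The sketch below assumes each level is checked in turn and that a miss simply adds the cost of the next level; the function name and all latencies and miss rates are made-up values for illustration, not measurements.

```python
def average_access_time(levels, ram_latency):
    """Estimate the average access time of a cache hierarchy.

    `levels` is a list of (lookup_latency, miss_rate) pairs, nearest level
    first. Every access pays a level's lookup latency; the fraction that
    misses additionally pays the cost of everything behind that level.
    """
    total = ram_latency
    for latency, miss_rate in reversed(levels):
        total = latency + miss_rate * total
    return total


RAM_LATENCY = 200  # hypothetical cycles for a direct RAM access

# Hypothetical design where most accesses hit in the caches.
good = average_access_time([(4, 0.1), (12, 0.5)], RAM_LATENCY)
# Hypothetical design where every access misses in both levels.
bad = average_access_time([(10, 1.0), (30, 1.0)], RAM_LATENCY)

print("good design:", good, "cycles on average")
print("bad design: ", bad, "cycles on average")
print("bad design slower than plain RAM:", bad > RAM_LATENCY)
```

In the second case the caches only add lookup time on top of the RAM access, which is the situation described above: the hierarchy ends up slower than having no cache at all.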

Consistency with memory

Because the cache contains copies of data from RAM but is not the RAM itself, there is a risk that the data will not match, not only between the cache and RAM but also between the different cache levels, some of which are separate from one another.

For this reason, mechanisms are needed to keep the data consistent across all levels. This means implementing an extremely complex system, whose complexity grows with the number of processor cores.
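One simple way to picture such a mechanism is a write-invalidate scheme: when one core writes to an address, every other core discards its own copy. The sketch below is a deliberately simplified model, not a real protocol; the `Core` class, the shared `bus` list, and the write-through behavior are all assumptions made for illustration.

```python
class Core:
    """A core with a private cache, attached to a shared bus (hypothetical model)."""

    def __init__(self, name, ram, bus):
        self.name = name
        self.ram = ram      # shared main memory, modeled as a dict
        self.cache = {}     # this core's private cache
        self.bus = bus      # list of all cores that share the memory
        bus.append(self)

    def read(self, addr):
        # On a miss, copy the value from RAM into the private cache.
        if addr not in self.cache:
            self.cache[addr] = self.ram[addr]
        return self.cache[addr]

    def write(self, addr, value):
        # Invalidate stale copies held by every other core.
        for other in self.bus:
            if other is not self:
                other.cache.pop(addr, None)
        self.cache[addr] = value
        self.ram[addr] = value  # write-through: RAM is updated immediately


ram = {0: 1}
bus = []
a = Core("a", ram, bus)
b = Core("b", ram, bus)

a.read(0)
b.read(0)          # both cores now hold a copy of address 0
a.write(0, 2)      # b's copy is invalidated
print(b.read(0))   # b misses and reloads the new value from RAM
```

Without the invalidation step, `b` would keep returning the old value from its own cache. Real processors keep track of far more state per cache line, and the bookkeeping grows as more cores are added.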
